Complete Features

These features were completed when this image was assembled.

Currently, the "Get started with on-premise host inventory" quickstart is delivered in the core console. If we keep it there, we need to add the MCE or ACM operator as a prerequisite; otherwise, it is very confusing.

Epic Goal

- Make it possible to disable the console operator at install time while still having a supported and upgradeable cluster.

Why is this important?

- It's possible to disable the console itself using spec.managementState in the console operator config, but there is no way to remove the console operator. For clusters where an admin wants to completely remove the console, we should give the option to disable the console operator as well.

Scenarios

- I'm an administrator who wants to minimize my OpenShift cluster footprint and who does not want the console installed on my cluster

Acceptance Criteria

- It is possible at install time to opt-out of having the console operator installed. Once the cluster comes up, the console operator is not running.

Dependencies (internal and external)

- Composable cluster installation

Previous Work (Optional):

- https://docs.google.com/document/d/1srswUYYHIbKT5PAC5ZuVos9T2rBnf7k0F1WV2zKUTrA/edit#heading=h.mduog8qznwz

- https://docs.google.com/presentation/d/1U2zYAyrNGBooGBuyQME8Xn905RvOPbVv3XFw3stddZw/edit#slide=id.g10555cc0639_0_7

Open questions:

- The console operator also manages the downloads deployment. Do we disable the downloads deployment too? In the long term, we want to move to the CLI manager: https://github.com/openshift/enhancements/blob/6ae78842d4a87593c63274e02ac7a33cc7f296c3/enhancements/oc/cli-manager.md

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

Update the cluster-authentication-operator to not go degraded when it can’t determine the console url. This risks masking certain cases where we would want to raise an error to the admin, but the expectation is that this failure mode is rare.

This risk could be avoided by checking ClusterVersion's enabledCapabilities to decide whether a missing console was expected (it is unclear whether the risk is high enough to justify this effort).

AC: Update the cluster-authentication-operator to not go degraded when the console config CRD is missing and ClusterVersion does not list Console in enabledCapabilities.
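A minimal sketch of the capability-aware check, following the reasoning in the risk note above: a missing console config is only expected when the Console capability is disabled. It assumes the ClusterVersion object is available as a plain dict and that the list lives at status.capabilities.enabledCapabilities; the function names are hypothetical, not the operator's actual code.

```python
from typing import List


def enabled_capabilities(cluster_version: dict) -> List[str]:
    """Read status.capabilities.enabledCapabilities from a ClusterVersion dict (assumed path)."""
    return (
        cluster_version.get("status", {})
        .get("capabilities", {})
        .get("enabledCapabilities", [])
    )


def console_missing_is_degraded(console_config_present: bool, enabled: List[str]) -> bool:
    """Hypothetical helper: should a missing console config set Degraded=True?"""
    if console_config_present:
        return False  # nothing is missing; the console URL lookup can proceed
    # Console absent from enabledCapabilities means the admin disabled it,
    # so a missing console config (and URL) is expected rather than an error.
    return "Console" in enabled
```

This keeps the degraded signal for the rare case the note worries about (Console enabled but its config missing) while staying quiet on clusters that opted out.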

We need to continue maintaining specific areas within storage; this epic captures that effort and tracks it across releases.

Goals

- To allow OCP users and cluster admins to detect problems early and with as little interaction with Red Hat as possible.

- When Red Hat is involved, make sure we have all the information we need from the customer, i.e. in metrics / telemetry / must-gather.

- Reduce storage test flakiness so we can spot real bugs in our CI.

Requirements

| Requirement | Notes | isMvp? |
|---|---|---|
| Telemetry | No | |
| Certification | No | |
| API metrics | No | |

Out of Scope

n/a

Background, and strategic fit

With the expected scale of our customer base, we want to keep the load of customer tickets / BZs low.

Assumptions

Customer Considerations

Documentation Considerations

- Target audience: internal

- Updated content: none at this time.

Notes

In progress:

- CSI certification flakes a lot. We should fix it before we start testing migration.

- In progress (API server restarts...) https://bugzilla.redhat.com/show_bug.cgi?id=1865857

- Get local-storage-operator and AWS EBS CSI driver operator logs in must-gather (OLM-managed operators are not included there)

- In progress for LSO (must-gather script being included in image) https://bugzilla.redhat.com/show_bug.cgi?id=1756096

- CI flakes:

- Configurable timeouts for e2e tests

- Azure is slow and times out often

- Cinder times out formatting volumes

- AWS resize test times out


High prio:

- Environment check tool for VMware: users often misconfigure permissions there and blame OpenShift. If we had a tool they could run, it could report better errors.

- Should it be part of the installer?

- Spike exists

- Add / use cloud API call metrics


- Helps customers to understand why things are slow

- Helps the build cop understand a flake

- With a post-install step that filters data from Prometheus that’s still running in the CI job.

- Ideas:

- Cloud is throttling X% of API calls longer than Y seconds

- Attach / detach / provisioning / deletion / mount / unmount / resize takes longer than X seconds?

- Capture metrics of operations that are stuck and won’t finish.

- Sweep the operation map from the operation executor?

- Report an operation into the highest bucket once it exceeds the last bucket threshold (e.g., if 10 minutes is the last bucket, report the operation into it after 10 minutes and don't wait for its completion)?

- Ask the monitoring team?

- Include in CSI drivers too.

- With alerts too

- Report events for cloud issues

- E.g., the cloud API reports an unusual attach/provision error (such as during an outage)

- Which volume plugins do users actually use the most? https://issues.redhat.com/browse/STOR-324
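The "report stuck operations into the highest bucket" idea from the list above can be sketched as a histogram plus a periodic sweep. This is a minimal illustration under stated assumptions: the class and method names are hypothetical, counts are kept non-cumulative (Prometheus itself exposes cumulative buckets), and a stuck operation is counted once in the overflow slot above the last bound, which corresponds to Prometheus's +Inf bucket.

```python
import bisect
from dataclasses import dataclass, field


@dataclass
class OperationHistogram:
    """Hypothetical duration histogram; the final slot holds values above bounds[-1]."""
    bounds: list                                   # ascending upper bounds in seconds, e.g. [1, 10, 600]
    counts: list = field(init=False)
    started: dict = field(default_factory=dict)   # op_id -> start time (seconds)
    reported: set = field(default_factory=set)    # op_ids already counted by sweep()

    def __post_init__(self):
        self.counts = [0] * (len(self.bounds) + 1)

    def _observe(self, duration):
        # bisect_left keeps bounds inclusive, matching Prometheus "le" semantics.
        self.counts[bisect.bisect_left(self.bounds, duration)] += 1

    def start(self, op_id, now):
        self.started[op_id] = now

    def finish(self, op_id, now):
        start = self.started.pop(op_id)
        if op_id in self.reported:
            # Already counted as stuck by sweep(); don't count it twice.
            self.reported.discard(op_id)
            return
        self._observe(now - start)

    def sweep(self, now):
        """Count operations running longer than the last bound without waiting for them."""
        for op_id, start in self.started.items():
            if op_id not in self.reported and now - start > self.bounds[-1]:
                self._observe(now - start)
                self.reported.add(op_id)
```

Passing `now` explicitly keeps the sketch deterministic; a real controller would call `sweep()` from a timer.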

Unsorted

- As the number of storage operators grows, it would be useful to have a Grafana board for storage operators

- CSI driver metrics (from CSI sidecars + the driver itself + its operator?)

- CSI migration?

- Get aggregated logs in cluster

- They're rotated too soon

- No logs from dead / restarted pods

- No tools to combine logs from multiple pods (e.g. 3 controller managers)

- What storage issues do customers have? Storage accounted for 22% of all issues.

- Insufficient docs?

- Probably garbage

- Document basic storage troubleshooting for our support teams

- Which logs are useful when, and which log level to use

- This has been discussed during the GSS weekly team meeting; however, it would be beneficial to have this documented.

- Common vSphere errors and how to debug and fix them.

- Document sig-storage flake handling - not all failed [sig-storage] tests are ours

Epic Goal

- Update all images that we ship with OpenShift to the latest upstream releases and libraries.

- The exact content to update will be determined as new images are released upstream, which is not known at the beginning of OCP development work. We don't know which new features will be included and should be tested and documented. New CSI driver releases in particular may bring new, currently unknown features. We expect the amount of work to be roughly the same as in previous releases. QE or docs can still reject an update if it is too close to the deadline and/or looks too big.

Traditionally, we did these updates as bugfixes because we did them after the feature freeze (FF). We are trying no feature freeze in 4.12. We will try to do as much as we can before FF, but we're quite sure something will slip past FF as usual.

Why is this important?

- We want to ship the latest software that contains new features and bugfixes.

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

A new driver release, 5.0.0, has been published since the last rebase; it includes snapshot support:

https://github.com/kubernetes-sigs/ibm-vpc-block-csi-driver/releases/tag/v5.0.0

Rebase the driver on v5.0.0 and update the deployments in ibm-vpc-block-csi-driver-operator.

There are no corresponding changes in ibm-vpc-node-label-updater since the last rebase.

Background and Goal

Currently in OpenShift we do not support distributing hotfix packages to cluster nodes. In time-sensitive situations, a RHEL hotfix package can be the quickest route to resolving an issue.

Acceptance Criteria

- Under guidance from Red Hat CEE, customers can deploy RHEL hotfix packages to MachineConfigPools.

- Customers can easily remove the hotfix when the underlying RHCOS image incorporates the fix.

Before we ship OCP CoreOS layering in https://issues.redhat.com/browse/MCO-165 we need to switch the format of what is currently `machine-os-content` to be the new base image.

The overall plan is:

- Publish the new base image as `rhel-coreos-8` in the release image

- Also publish the new extensions container (https://github.com/openshift/os/pull/763) as `rhel-coreos-8-extensions`

- Teach the MCO to use this without also involving layering/build controller

- Delete old `machine-os-content`

We need something in our repo's /docs that we can point people to, briefly explaining how to use "layering features" via the MCO in OCP (with the understanding that OKD also uses the MCO).

Maybe this ends up in its own repo like https://github.com/coreos/coreos-layering-examples eventually, maybe it doesn't.

I'm thinking something like https://github.com/openshift/machine-config-operator/blob/layering/docs/DemoLayering.md back from when we did the layering branch, but actually matching what we have in our main branch.

This is separate but probably related to what Colin started in the Docs Tracker.

Feature Overview

- Follow-up work for the new provider, Nutanix, to extend existing capabilities with new ones

Goals

- Make the Nutanix CSI Driver part of the CVO once the vendor has open-sourced the driver and the Operator

- Enable IPI for disconnected environments

- Enable the UPI workflow

- Nutanix CCM for the Node Controller

- Enable Egress IP for the provider

Requirements

- This Section: A list of specific needs or objectives that a Feature must deliver to satisfy the Feature. Some requirements will be flagged as MVP. If an MVP requirement slips, the feature shifts. If a non-MVP requirement slips, it does not shift the feature.

| Requirement | Notes | isMvp? |
|---|---|---|
| CI - MUST be running successfully with test automation | This is a requirement for ALL features. | YES |
| Release Technical Enablement | Provide necessary release enablement details and documents. | YES |

OCP/Telco Definition of Done


Epic Goal

- Allow users to choose Nutanix platform integration (similar to vSphere) from the Assisted Installer (AI) SaaS

Why is this important?

- Expand the Red Hat offering beyond IPI

Scenarios

- ...

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- ...

Dependencies (internal and external)

- ...

Previous Work (Optional):

- …

Open questions:

- …

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

Description of the problem:

BE 2.13.0: on Nutanix, in the UMN flow, if machine_network = [], bootstrap validation fails.

How reproducible:

Trying to reproduce

Steps to reproduce:

1.

2.

3.

Actual results:

Expected results:

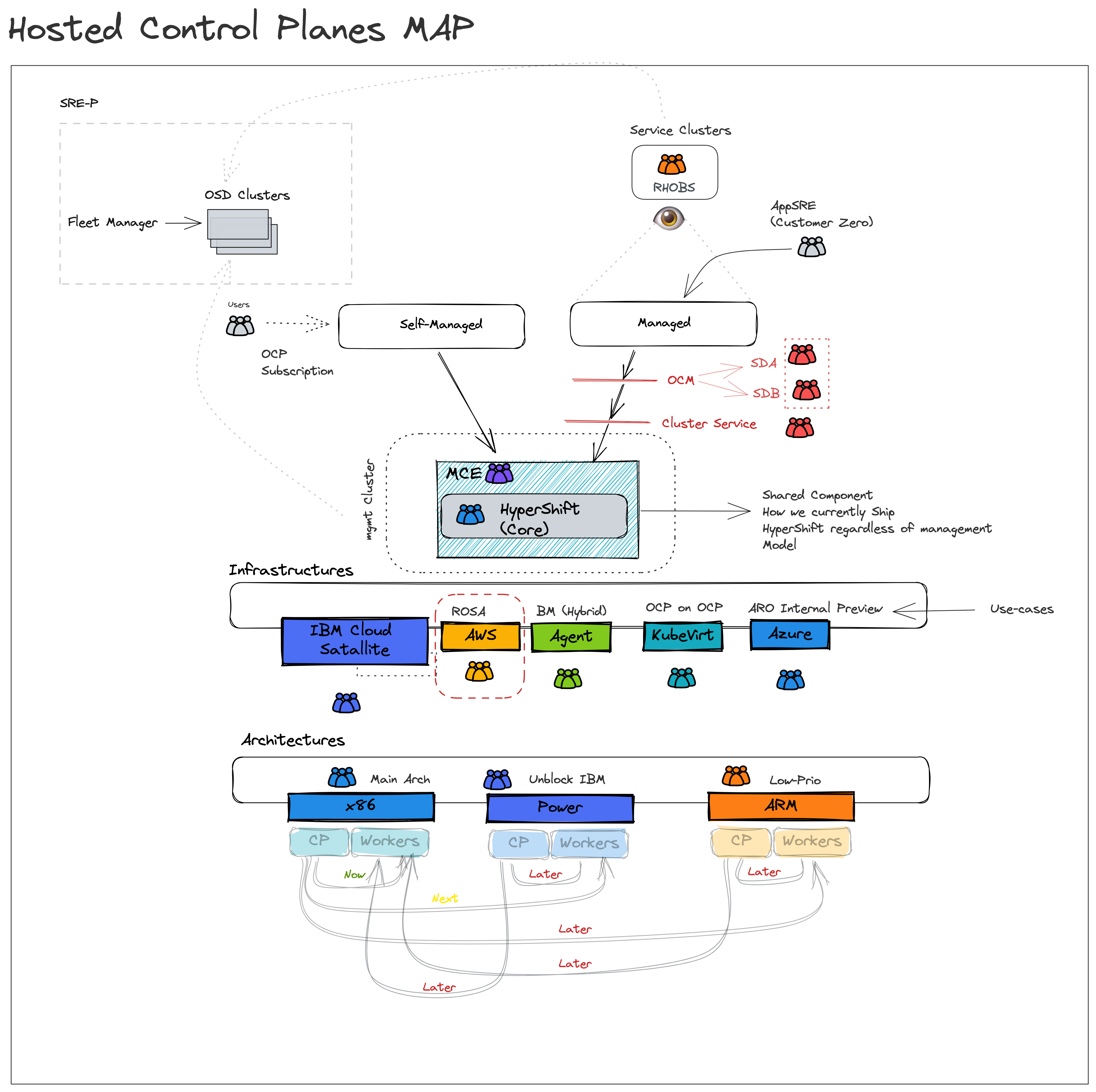

Why?

- Decouple control and data plane.

- Customers do not pay Red Hat more to run HyperShift control planes and supporting infrastructure than Standalone control planes and supporting infrastructure.

- Improve security

- Shift credentials that support the operation of the core platform (as opposed to workloads) out of the cluster

- Improve cost

- Allow a user to toggle what they don’t need.

- Ensure a smooth path to scale to 0 workers and upgrade with 0 workers.

Assumption

- A customer will be able to associate a cluster as “Infrastructure only”

- E.g. one option: management cluster has role=master, and role=infra nodes only, control planes are packed on role=infra nodes

- OR the entire cluster is labeled infrastructure, and node roles are ignored.

- Anything that runs on a master node by default in Standalone that is present in HyperShift MUST be hosted and not run on a customer worker node.

Doc: https://docs.google.com/document/d/1sXCaRt3PE0iFmq7ei0Yb1svqzY9bygR5IprjgioRkjc/edit

Epic Goal

- To improve the debuggability of ovn-k in HyperShift

- To verify the stability of ovn-k in HyperShift

- To introduce an EgressIP reachability check that works in HyperShift

Why is this important?

- ovn-k is supposed to be GA in 4.12. We need to make sure it is stable, that we know its limitations, and that we can debug it as we do on self-hosted clusters.

Acceptance Criteria

- CI - MUST be running successfully with tests automated

Dependencies (internal and external)

- This will need consultation with the people working on HyperShift

Previous Work (Optional):

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

CNCC was moved to the management cluster, so it should use the proxy settings defined for the management cluster.

In testing dual stack on vSphere, we discovered that kubelet will not allow us to specify two IPs on any platform except bare metal. We have a couple of options to deal with that:

- Wait for https://github.com/kubernetes/enhancements/pull/3706 to merge and be implemented upstream. This almost certainly means we miss 4.13.

- Wait for https://github.com/kubernetes/enhancements/pull/3706 to merge and then implement the design downstream. This involves risk of divergence from the eventual upstream design. We would probably only ship this way as tech preview and provide support exceptions for major customers.

- Remove the setting of nodeip for kubelet. This should get around the limitation on providing dual IPs, but it means we're reliant on the default kubelet IP selection logic, which is...not good. We'd probably only be able to support this on single nic network configurations.

GA CgroupV2 in 4.13

Default with RHEL 9

- Day 0 support for 4.13, where the customer can change from v1 (default) to v2

- Day 1 support, where the customer can change from v1 (default) to v2

- Documentation on migration

- Pinning existing clusters to V1 before upgrade to 4.13

Starting with OCP 4.13, RHCOS nodes come up with the "CGroupsV2" configuration by default.

Command to verify the cgroup version on any OCP cluster node:

stat -c %T -f /sys/fs/cgroup/

So, to avoid unexpected complications, if `cgroupMode` is found to be empty in the `nodes.config` resource, the CGroupsV1 configuration needs to be set explicitly using the `machine-config-operator`.
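A minimal sketch of both checks above, assuming the `stat` output is available as a string and the `nodes.config` object as a dict with an optional `spec.cgroupMode` field. On cgroup v2 hosts `/sys/fs/cgroup` is a `cgroup2fs` filesystem, while on cgroup v1 hosts it is a `tmpfs`. The function names are hypothetical, and the pinning rule simply restates the text: an empty mode is pinned to v1 before upgrade.

```python
from typing import Optional


def cgroup_version_from_fstype(fstype: str) -> str:
    """Map the output of `stat -c %T -f /sys/fs/cgroup/` to a cgroup version."""
    fstype = fstype.strip()
    if fstype == "cgroup2fs":
        return "v2"
    if fstype == "tmpfs":
        return "v1"
    raise ValueError(f"unexpected filesystem type: {fstype!r}")


def cgroup_mode_to_pin(nodes_config: dict) -> Optional[str]:
    """Return the cgroupMode to set explicitly before upgrading to 4.13, or None."""
    mode = nodes_config.get("spec", {}).get("cgroupMode", "")
    # An empty mode would otherwise follow the new default; pin it to v1.
    return "v1" if mode == "" else None
```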

This user story tracks the changes required to remove the TechPreview-related checks from the MCO code so that the CGroupsV2 feature can graduate to GA.

Feature

As an Infrastructure Administrator, I want to deploy OpenShift on vSphere with supervisor (aka Masters) and worker nodes (from a MachineSet) across multiple vSphere data centers and multiple vSphere clusters using full stack automation (IPI) and user provided infrastructure (UPI).

MVP

Install OpenShift on vSphere using IPI / UPI in multiple vSphere data centers (regions) and multiple vSphere clusters in 1 vCenter, all in the same IPv4 subnet (in the same physical location).

- Kubernetes Region contains vSphere datacenter and (single) vCenter name

- Kubernetes Zone contains vSphere cluster, resource pool, datastore, network (port group)
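The region/zone mapping above can be sketched as a small data model. This is illustrative only: the `FailureDomain` fields are hypothetical names, not the installer's install-config schema. Region and zone names are exposed through the well-known `topology.kubernetes.io/region` and `topology.kubernetes.io/zone` node labels, and the validation enforces the MVP constraints (one vCenter, each region bound to one datacenter).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureDomain:
    # Hypothetical model of one failure domain; field names are illustrative.
    region: str         # exposed as topology.kubernetes.io/region
    zone: str           # exposed as topology.kubernetes.io/zone
    vcenter: str        # region scope: vCenter ...
    datacenter: str     # ... and vSphere datacenter
    cluster: str        # zone scope: vSphere cluster,
    resource_pool: str  # resource pool,
    datastore: str      # datastore,
    network: str        # and network (port group)


def validate_regions(domains):
    """Return {region: (vcenter, datacenter)}, enforcing the MVP constraints."""
    if len({fd.vcenter for fd in domains}) > 1:
        raise ValueError("MVP supports a single vCenter")
    regions = {}
    for fd in domains:
        scope = (fd.vcenter, fd.datacenter)
        # setdefault records the first scope seen; a mismatch means the same
        # region name was reused for a different datacenter.
        if regions.setdefault(fd.region, scope) != scope:
            raise ValueError(f"region {fd.region!r} spans more than one datacenter")
    return regions


def node_labels(fd):
    """Kubernetes topology labels for nodes placed in this failure domain."""
    return {
        "topology.kubernetes.io/region": fd.region,
        "topology.kubernetes.io/zone": fd.zone,
    }
```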

Out of scope

- Converting a non-zonal configuration (i.e. an existing OpenShift installation without 1+ zones) to a zonal configuration (1+ zones) is not supported, but zonal UPI installation by the Infrastructure Administrator is permitted.

Scenarios for consideration:

- OpenShift in vSphere across different zones to avoid single points of failure, whereby each node is in different ESX clusters within the same vSphere datacenter, but in different networks.

- OpenShift in vSphere across multiple vSphere datacenters, while ensuring workers and masters are spread across 2 different datacenters in different subnets (RFE-845, RFE-459).

Acceptance criteria:

- Ensure vSphere IPI can successfully be deployed with ODF across the 3 zones (vSphere clusters) within the same vCenter [like we do with AWS, GCP & Azure].

- Ensure zonal configuration in vSphere using UPI is documented and tested.

References:

Epic Goal*

We need SPLAT-594 to be reflected in our CSI driver operator to support vSphere topology of storage GA.

Why is this important? (mandatory)

See SPLAT-320.

Scenarios (mandatory)

As a user, I want to edit the Infrastructure object after OCP installation (or upgrade) to update the cluster topology, so that all newly provisioned PVs get the new topology labels.

(With vSphere topology GA, we expect that users will be allowed to edit Infrastructure and change the cluster topology after cluster installation.)

Dependencies (internal and external) (mandatory)

- SPLAT: [vsphere] Support Multiple datacenters and clusters GA.

Our expectation is that teams would modify the list below to fit the epic. Some epics may not need all the default groups but what is included here should accurately reflect who will be involved in delivering the epic.

- Development -

- Documentation -

- QE -

- PX -

- Others -

Acceptance Criteria (optional)

Provide some (testable) examples of how we will know if we have achieved the epic goal.

Drawbacks or Risk (optional)

It's possible that Infrastructure will remain read-only. No code on Storage side is expected then.

Done - Checklist (mandatory)

- CI Testing - Basic e2e automation tests are merged and completing successfully

- Documentation - Content development is complete.

- QE - Test scenarios are written and executed successfully.

- Technical Enablement - Slides are complete (if requested by PLM)

- Engineering Stories Merged

- All associated work items with the Epic are closed

- Epic status should be “Release Pending”

When STOR-1145 is merged, make sure that these new metrics are reported via telemetry to us.

Exit criteria:

- Verify that metrics are reported in telemetry? I am not sure we have the capability to test that; all code will be in the monitoring repos.

I was thinking we will probably need a detailed metric for topology information about the cluster, such as how many failure domains, datacenters, and datastores it has.
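A minimal sketch of such a topology metric, assuming failure domains are available as dicts with `name`, `datacenter`, and `datastore` keys (an assumed shape, not the Infrastructure API). The metric name `vsphere_topology_objects` is hypothetical; the output uses the Prometheus text exposition format with one gauge sample per object kind.

```python
def topology_summary(failure_domains):
    """Count failure domains, datacenters, and datastores in the cluster topology."""
    return {
        "failure_domains": len({fd["name"] for fd in failure_domains}),
        "datacenters": len({fd["datacenter"] for fd in failure_domains}),
        # Datastore names may repeat across datacenters, so count (datacenter, datastore) pairs.
        "datastores": len({(fd["datacenter"], fd["datastore"]) for fd in failure_domains}),
    }


def render_gauge(name, summary):
    """Render the summary as a gauge in Prometheus text exposition format."""
    lines = [f"# TYPE {name} gauge"]
    for kind, value in sorted(summary.items()):
        lines.append(f'{name}{{object="{kind}"}} {value}')
    return "\n".join(lines)
```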

In zonal deployments, new failure domains may be added to the cluster.

In that case, we will most likely have to discover these new failure domains and tag the datastores in them so that topology-aware provisioning can work.
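The discovery step can be sketched as a diff between the failure domains the operator has already handled and those currently listed in the Infrastructure object. This assumes failure domains are dicts with `name`, `datacenter`, and `datastore` keys and that the operator remembers which names it has tagged; `plan_tagging` is a hypothetical helper, and the actual vSphere tagging calls are out of scope here.

```python
def plan_tagging(tagged_domains, infra_domains):
    """Return (new failure-domain names, sorted (datacenter, datastore) pairs to tag)."""
    new = [fd for fd in infra_domains if fd["name"] not in tagged_domains]
    # Deduplicate datastores shared by several new failure domains.
    datastores = sorted({(fd["datacenter"], fd["datastore"]) for fd in new})
    return [fd["name"] for fd in new], datastores
```

Running the diff on every reconcile makes tagging idempotent: once a domain is recorded as tagged, later passes return nothing for it.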

We should create a metric and an alert if both the ClusterCSIDriver and the Infra object specify a topology.

Although such a configuration is supported and the Infra object takes precedence, it indicates a user error, so the user should be alerted.
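A minimal sketch of the precedence rule and the conflict signal, assuming each source's topology is given as an optional list of topology category names. The function names and the gauge name `vsphere_csi_topology_conflict` are hypothetical; an alerting rule could fire while the gauge equals 1.

```python
from typing import Optional, Sequence, Tuple


def effective_topology(
    infra_categories: Optional[Sequence[str]],
    csidriver_categories: Optional[Sequence[str]],
) -> Tuple[Optional[Sequence[str]], bool]:
    """Return (topology categories to use, whether both sources set one)."""
    conflict = bool(infra_categories) and bool(csidriver_categories)
    if infra_categories:
        # The Infra object takes precedence when both specify a topology.
        return infra_categories, conflict
    return csidriver_categories, conflict


def conflict_metric(conflict: bool) -> str:
    # Hypothetical gauge sample in Prometheus text format: 1 = both set, 0 = fine.
    return f"vsphere_csi_topology_conflict {int(conflict)}"
```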

As an OpenShift engineer, make changes to various OpenShift components so that vSphere zonal installation is considered GA.

As an OpenShift engineer, implement a new job for UPI zonal so that this installation method is tested.

As an OpenShift engineer, create an additional UPI Terraform configuration for zonal so that it can be tested in CI.

As an OpenShift engineer, deprecate the existing vSphere platform spec parameters so that they can eventually be removed in favor of zonal.

As an OpenShift engineer, I need to follow the process to move the API from Tech Preview to GA so it can be used by clusters not installed with TechPreviewNoUpgrade.

more to follow...

Feature Goal*

What is our purpose in implementing this? What new capability will be available to customers?

The goal of this feature is to provide a consistent, predictable and deterministic approach on how the default storage class(es) is managed.

Why is this important? (mandatory)

The current default storage class implementation has corner cases that can leave PVCs stuck in Pending because there is either no default storage class OR multiple default storage classes are defined.

Scenarios (mandatory)

Provide details for user scenarios including actions to be performed, platform specifications, and user personas.

No default storage class

In some cases there is no default SC defined. This can happen during OCP deployment, when components such as the registry request a PV before the SC has been defined. It can also happen while the default SC is being changed: there is no default between the moment the admin unsets the current one and sets the new one.

- The admin marks the current default SC1 as non-default.

Another user creates a PVC requesting a default SC by leaving pvc.spec.storageClassName=nil. The default SC does not exist at this point, therefore the admission plugin leaves the PVC untouched with pvc.spec.storageClassName=nil.

The admin marks SC2 as default.

PV controller, when reconciling the PVC, updates pvc.spec.storageClassName=nil to the new SC2.

PV controller uses the new SC2 when binding / provisioning the PVC.

- The installer first creates a PVC for the image registry, requesting the default storage class by leaving pvc.spec.storageClassName=nil.

The installer creates a default SC.

PV controller, when reconciling the PVC, updates pvc.spec.storageClassName=nil to the new default SC.

PV controller uses the new default SC when binding / provisioning the PVC.
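Both scenarios reduce to the same PV controller step: a PVC whose storageClassName is still nil is updated once a default SC exists. A minimal sketch, assuming the PVC is a dict and the current default SC name has already been resolved. `reconcile_pvc` is a hypothetical name; skipping PVCs that already have a `volumeName` is an assumption here (only unbound PVCs are rewritten). An empty string is kept as-is because in Kubernetes `""` explicitly requests no storage class, unlike nil.

```python
import copy
from typing import Optional


def reconcile_pvc(pvc: dict, default_sc: Optional[str]) -> dict:
    """Return the PVC, with storageClassName filled in retroactively if appropriate."""
    spec = pvc.get("spec", {})
    if spec.get("storageClassName") is not None:
        return pvc  # explicit class, including "" (no class), is left untouched
    if spec.get("volumeName"):
        return pvc  # assumption: already-bound PVCs are not rewritten
    if default_sc is None:
        return pvc  # still no default SC; the PVC keeps waiting
    updated = copy.deepcopy(pvc)
    updated.setdefault("spec", {})["storageClassName"] = default_sc
    return updated
```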

Multiple Storage Classes

In some cases there are multiple default SCs. This can be an admin mistake (forgetting to unset the old one) or happen during the period when a new default SC has been created but the old one is still present.

New behavior:

- Create a default storage class A

- Create a default storage class B

- Create PVC with pvc.spec.storageClassName = nil

-> the PVC will get the default storage class with the newest CreationTimestamp (i.e. B) and no error should show.

-> admin will get an alert that there are multiple default storage classes and they should do something about it.
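The new behavior can be sketched as a selection function over StorageClass objects (as dicts) that returns both the chosen default and whether the multiple-defaults alert should fire. It uses the standard `storageclass.kubernetes.io/is-default-class` annotation and RFC 3339 `creationTimestamp` values; `pick_default` is a hypothetical name and tie-breaking between identical timestamps is not handled.

```python
from datetime import datetime
from typing import List, Optional, Tuple

DEFAULT_ANNOTATION = "storageclass.kubernetes.io/is-default-class"


def _created(sc: dict) -> datetime:
    # Kubernetes timestamps look like "2023-01-02T03:04:05Z" (UTC).
    return datetime.fromisoformat(sc["metadata"]["creationTimestamp"].replace("Z", "+00:00"))


def pick_default(storage_classes: List[dict]) -> Tuple[Optional[str], bool]:
    """Return (name of the default SC to use, whether to raise the multiple-defaults alert)."""
    defaults = [
        sc for sc in storage_classes
        if sc["metadata"].get("annotations", {}).get(DEFAULT_ANNOTATION) == "true"
    ]
    if not defaults:
        return None, False
    newest = max(defaults, key=_created)  # newest CreationTimestamp wins
    return newest["metadata"]["name"], len(defaults) > 1
```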

CSI drivers that are shipped as part of OCP

The CSI drivers we ship as part of OCP are deployed and managed by RH operators. These operators automatically create a default storage class. Some customers don't like this approach and prefer to:

- Create their own default storage class

- Have no default storage class in order to disable dynamic provisioning

Dependencies (internal and external) (mandatory)

What items must be delivered by other teams/groups to enable delivery of this epic.

No external dependencies.

Contributing Teams (and contacts) (mandatory)

Our expectation is that teams would modify the list below to fit the epic. Some epics may not need all the default groups but what is included here should accurately reflect who will be involved in delivering the epic.

- Development - STOR

- Documentation - STOR

- QE - STOR

- PX -

- Others -

Acceptance Criteria (optional)

Provide some (testable) examples of how we will know if we have achieved the epic goal.

Drawbacks or Risk (optional)

This may confuse customers because it changes the default behavior they are used to. This needs to be carefully documented.

Done - Checklist (mandatory)

The following points apply to all epics and are what the OpenShift team believes are the minimum set of criteria that epics should meet for us to consider them potentially shippable. We request that epic owners modify this list to reflect the work to be completed in order to produce something that is potentially shippable.

- CI Testing - Basic e2e automation tests are merged and completing successfully

- Documentation - Content development is complete.

- QE - Test scenarios are written and executed successfully.

- Technical Enablement - Slides are complete (if requested by PLM)

- Engineering Stories Merged

- All associated work items with the Epic are closed

- Epic status should be “Release Pending”

- https://github.com/openshift/alibaba-disk-csi-driver-operator/pull/41

- https://github.com/openshift/aws-ebs-csi-driver-operator/pull/173

- https://github.com/openshift/azure-disk-csi-driver-operator/pull/63

- https://github.com/openshift/azure-file-csi-driver-operator/pull/42

- https://github.com/openshift/gcp-pd-csi-driver-operator/pull/58

- https://github.com/openshift/ibm-vpc-block-csi-driver-operator/pull/48

- https://github.com/openshift/openstack-cinder-csi-driver-operator/pull/103

- https://github.com/openshift/ovirt-csi-driver-operator/pull/111

- https://github.com/openshift/vmware-vsphere-csi-driver-operator/pull/126

Feature Overview

Much like core OpenShift operators, a standardized flow exists for OLM-managed operators to interact with the cluster in a specific way to leverage AWS STS authorization when using AWS APIs, as opposed to insecure static, long-lived credentials. OLM-managed operators can implement integration with the CloudCredentialOperator in a well-defined way to support this flow.

Goals:

Enable customers to easily leverage OpenShift's capabilities around AWS STS with layered products, for increased security posture. Enable OLM-managed operators to implement support for this in a well-defined pattern.

Requirements:

- CCO gets a new mode in which it can reconcile STS credential requests for OLM-managed operators

- A standardized flow is leveraged to guide users in discovering and preparing their AWS IAM policies and roles with permissions that are required for OLM-managed operators

- A standardized flow is defined in which users can configure OLM-managed operators to leverage AWS STS

- An example operator is used to demonstrate the end2end functionality

- Clear instructions and documentation for operator development teams to implement the required interaction with the CloudCredentialOperator to support this flow
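To make the flow concrete, here is a sketch of the kind of credential an operator could receive: an AWS shared-config profile that points at a pre-created IAM role and a projected service account token, so no static keys are involved. This mirrors, as we understand it, what ccoctl produces for core operators; the key names, the token path, and storing the result under a `credentials` key of a Secret are assumptions, and `render_sts_credentials` is a hypothetical helper.

```python
import configparser
import io
import re

# Accepts standard, GovCloud, and China partitions, e.g. arn:aws-us-gov:iam::...
ROLE_ARN_RE = re.compile(r"^arn:aws[a-z-]*:iam::\d{12}:role/.+$")
# Assumed mount path of the projected service account token.
TOKEN_PATH = "/var/run/secrets/openshift/serviceaccount/token"


def render_sts_credentials(role_arn: str, token_path: str = TOKEN_PATH) -> str:
    """Render an AWS shared-config profile that assumes role_arn via web identity."""
    if not ROLE_ARN_RE.match(role_arn):
        raise ValueError(f"not an IAM role ARN: {role_arn!r}")
    cfg = configparser.ConfigParser()
    cfg["default"] = {
        "sts_regional_endpoints": "regional",
        "role_arn": role_arn,
        "web_identity_token_file": token_path,
    }
    buf = io.StringIO()
    cfg.write(buf)
    return buf.getvalue()
```

The role ARN is the only user-supplied input, which matches the "very minimal set of parameters" the user stories below call for.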

Use Cases:

See Operators & STS slide deck.

Out of Scope:

- handling OLM-managed operator updates in which AWS IAM permission requirements might change from one version to another (which requires user awareness and intervention)

Background:

The CloudCredentialOperator already provides a powerful API for OpenShift's core cluster operators to request credentials and acquire them via short-lived tokens. This capability should be expanded to OLM-managed operators, specifically to Red Hat layered products that interact with AWS APIs. Today the process ranges from cumbersome to non-existent, depending on the operator in question, and is seen as an adoption blocker for OpenShift on AWS.

Customer Considerations

This is particularly important for ROSA customers. Customers are expected to be asked to pre-create the required IAM roles outside of OpenShift, which is deemed acceptable.

Documentation Considerations

- Internal documentation needs to exist to guide Red Hat operator developer teams on the requirements and proposed implementation of integration with CCO and the proposed flow

- External documentation needs to exist to guide users on:

- how to become aware that the cluster is in STS mode

- how to become aware of operators that support STS and the proposed CCO flow

- how to become aware of the IAM permissions requirements of these operators

- how to configure an operator in the proposed flow to interact with CCO

Interoperability Considerations

- this needs to work with ROSA

- this needs to work with self-managed OCP on AWS

Market Problem

This Section: High-Level description of the Market Problem ie: Executive Summary

- As a customer of OpenShift layered products, I need to be able to fluidly, reliably and consistently install and use OpenShift layered product Kubernetes Operators into my ROSA STS clusters, while keeping a STS workflow throughout.

- As a customer of OpenShift on the big cloud providers, overall I expect OpenShift as a platform to function equally well with tokenized cloud auth as it does with "mint-mode" IAM credentials. I expect the same from the Kubernetes Operators under the Red Hat brand (that need to reach cloud APIs) in that tokenized workflows are equally integrated and workable as with "mint-mode" IAM credentials.

- As the managed services, including Hypershift teams, offering a downstream opinionated, supported and managed lifecycle of OpenShift (in the forms of ROSA, ARO, OSD on GCP, Hypershift, etc), the OpenShift platform should have as close as possible, native integration with core platform operators when clusters use tokenized cloud auth, driving the use of layered products.


- As the Hypershift team, where the only credential mode for clusters/customers is STS (on AWS), the Red Hat branded Operators that must reach the AWS API should be enabled to work with STS credentials in a consistent and automated fashion that allows customers to use those operators as easily as possible, driving the use of layered products.

Why it Matters

- Adding consistent, automated layered product integrations to OpenShift would provide great added value to OpenShift as a platform, and its downstream offerings in Managed Cloud Services and related offerings.

- Enabling Kubernetes Operators (at first, Red Hat ones) on OpenShift for the "big3" cloud providers is a key differentiation and security requirement that our customers have demanded and continue to demand.

- HyperShift is an STS-only architecture, which means that if our layered offerings via Operators cannot easily work with STS, then it would be blocking us from our broad product adoption goals.

Illustrative User Stories or Scenarios

- Main success scenario - high-level user story

- customer creates a ROSA STS or Hypershift cluster (AWS)

- customer wants basic (table-stakes) features such as AWS EFS or RHODS or Logging

- customer sees necessary tasks for preparing for the operator in OperatorHub from their cluster

- customer prepares AWS IAM/STS roles/policies in anticipation of the Operator they want, using what they get from OperatorHub

- customer provides a very minimal set of parameters (AWS ARN of role(s) with policy) to the Operator's OperatorHub page

- The cluster can automatically set up the Operator using the provided tokenized credentials, and the Operator functions as expected

- Cluster and Operator upgrades are taken into account and automated

- The above steps 1-7 should apply similarly for Google Cloud and Microsoft Azure Cloud, with their respective token-based workload identity systems.

- Alternate flow/scenarios - high-level user stories

- The same as above, but the ROSA CLI would assist with AWS role/policy management

- The same as above, but the oc CLI would assist with cloud role/policy management (per respective cloud provider for the cluster)

- ...

Expected Outcomes

This Section: Articulates and defines the value proposition from a user's point of view

- See SDE-1868 as an example of what is needed, including design proposed, for current-day ROSA STS and by extension Hypershift.

- Further research is required to accommodate the AWS STS equivalent systems of GCP and Azure

- Order of priority at this time is

- 1. AWS STS for ROSA and ROSA via HyperShift

- 2. Microsoft Azure for ARO

- 3. Google Cloud for OpenShift Dedicated on GCP

Effect

This Section: Effect is the expected outcome within the market. There are two dimensions of outcomes; growth or retention. This represents part of the “why” statement for a feature.

- Growth is the acquisition of net new usage of the platform. This can be new workloads not previously able to be supported, new markets not previously considered, or new end users not previously served.

- Retention is maintaining and expanding existing use of the platform. This can be more effective use of tools, competitive pressures, and ease of use improvements.

- Both growth and retention are effects of this effort.

- Customers have strict requirements around using only token-based cloud credential systems for workloads in their cloud accounts, which include OpenShift clusters in all forms.

- We gain new customers from both those that have waited for token-based auth/auth from OpenShift and from those that are new to OpenShift, with strict requirements around cloud account access

- We retain customers that are going through both cloud-native and hybrid-cloud journeys, all of which inevitably see security requirements driving them towards token-based auth/auth.


References

As an engineer, I want the capability to implement CI test cases that run at different intervals, such as daily or weekly, to ensure that downstream operators that depend on certain capabilities are not negatively impacted when systems that CCO interacts with change behavior.

Acceptance Criteria:

Create a stubbed out e2e test path in CCO and matching e2e calling code in release such that there exists a path to tests that verify working in an AWS STS workflow.

oc-mirror is a GA product as of OpenShift 4.11.

The goal of this feature is to address future customer requests for new features or capabilities in oc-mirror.

In 4.12 release, a new feature was introduced to oc-mirror allowing it to use OCI FBC catalogs as starting point for mirroring operators.

Overview

As an oc-mirror user, I would like the OCI FBC feature to be stable

so that I can use it in a production-ready environment

and so that the new feature works seamlessly with all existing features of oc-mirror

Current Status

This feature is ring-fenced in the oc-mirror repository: it is enabled through the following flags so as not to cause any breaking changes to the current oc-mirror functionality.

- --use-oci-feature

- --oci-feature-action (copy or mirror)

- --oci-registries-config

The OCI FBC (file-based catalog) format was delivered as Tech Preview in 4.12.

Tech Enablement slides can be found here https://docs.google.com/presentation/d/1jossypQureBHGUyD-dezHM4JQoTWPYwiVCM3NlANxn0/edit#slide=id.g175a240206d_0_7

Design doc is in https://docs.google.com/document/d/1-TESqErOjxxWVPCbhQUfnT3XezG2898fEREuhGena5Q/edit#heading=h.r57m6kfc2cwt (also contains latest design discussions around the stories of this epic)

Link to previous working epic https://issues.redhat.com/browse/CFE-538

Contacts for the OCI FBC feature

- Sherine Khoury

- Luigi Mario Zuccarelli

- IBM John Hunkins

- CFE PM Heather Heffner

- WRKLDS PM Tomas Smetana

As an IBM user, I'd like to be able to specify the destination of the OCI FBC catalog in the ImageSetConfig

So that I can control where that image is pushed on the disconnected destination registry, because it doesn't make sense to use the on-disk path of that OCI catalog as the component paths of the destination catalog.

Expected Inputs and Outputs - Counter Proposal

Examples provided assume that the current working directory is set to /tmp/cwdtest.

Instead of introducing a targetNamespace which is used in combination with targetName, this counter proposal introduces a targetCatalog field which supersedes the existing targetName field (which would be marked as deprecated). Users should transition from using targetName to targetCatalog, but if both happen to be specified, targetCatalog is preferred and targetName is ignored. Any ISC that currently uses targetName alone should continue to work as currently defined.
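A minimal sketch of the precedence rule described above, written in Go because oc-mirror is a Go project. The `CatalogOptions` type and the `effectiveTarget` function are hypothetical and model only the two fields under discussion; they are not oc-mirror's actual types.

```go
package main

import "fmt"

// CatalogOptions is a hypothetical, trimmed-down view of one entry under
// mirror.operators in an ImageSetConfiguration.
type CatalogOptions struct {
	Catalog       string // source catalog reference
	TargetName    string // deprecated by this proposal
	TargetCatalog string // proposed replacement: [namespace/]target-name
}

// effectiveTarget returns the value that drives the destination catalog path
// and reports whether the deprecated targetName field was used.
// targetCatalog always wins; targetName is only honoured on its own.
func effectiveTarget(o CatalogOptions) (target string, usedDeprecated bool) {
	switch {
	case o.TargetCatalog != "":
		return o.TargetCatalog, false
	case o.TargetName != "":
		return o.TargetName, true
	default:
		return "", false
	}
}

func main() {
	both := CatalogOptions{TargetName: "old-name", TargetCatalog: "cpopen/new-name"}
	t, dep := effectiveTarget(both)
	fmt.Println(t, dep) // cpopen/new-name false
}
```

Returning the "deprecated" flag separately leaves room for a warning to be logged without changing the resolved value.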

The rationale for targetCatalog is that some customers will have restrictions on where images can be placed. All IBM images always use a namespace. We therefore need a way to indicate where the CATALOG image is located within the context of the target registry... it can't just be placed in the root, so we need a way to configure this.

The targetCatalog field consists of an optional namespace followed by the target image name, described in extended Backus–Naur form below:

target-catalog = [namespace '/'] target-name
target-name    = path-component
namespace      = path-component ['/' path-component]*
path-component = alpha-numeric [separator alpha-numeric]*
alpha-numeric  = /[a-z0-9]+/
separator      = /[_.]|__|[-]*/

The target-name portion of targetCatalog represents the image name in the final destination registry, and matches the definition/purpose of the targetName field. The namespace is only used for "placement" of the catalog image into the right "hierarchy" in the target registry. The target-name portion will be used in the catalog source metadata name, the file name of the catalog source, and the target image reference.

Examples:

- with namespace:

targetCatalog: foo/bar/baz/ibm-zcon-zosconnect-example

- without namespace:

targetCatalog: ibm-zcon-zosconnect-example
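The grammar above can be checked with one regular expression per path component. This is a sketch, not oc-mirror's validation code: `parseTargetCatalog` is a hypothetical helper that splits on `/`, validates every component, and treats everything before the last component as the namespace.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// pathComponent encodes:
//   path-component = alpha-numeric [separator alpha-numeric]*
//   alpha-numeric  = /[a-z0-9]+/
//   separator      = /[_.]|__|[-]*/
var pathComponent = regexp.MustCompile(`^[a-z0-9]+(?:(?:[_.]|__|[-]*)[a-z0-9]+)*$`)

// parseTargetCatalog splits a targetCatalog value into its optional namespace
// and its target-name, rejecting any component that violates the grammar.
func parseTargetCatalog(v string) (namespace, name string, err error) {
	parts := strings.Split(v, "/")
	for _, p := range parts {
		if !pathComponent.MatchString(p) {
			return "", "", fmt.Errorf("invalid path component %q in targetCatalog %q", p, v)
		}
	}
	last := len(parts) - 1
	return strings.Join(parts[:last], "/"), parts[last], nil
}

func main() {
	for _, v := range []string{"foo/bar/baz/ibm-zcon-zosconnect-example", "ibm-zcon-zosconnect-example"} {
		ns, name, err := parseTargetCatalog(v)
		fmt.Printf("namespace=%q name=%q err=%v\n", ns, name, err)
	}
}
```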

Simple Flow

FBC image from docker registry

Command:

oc mirror -c /Users/jhunkins/go/src/github.com/jchunkins/oc-mirror/ImageSetConfiguration.yml --dest-skip-tls --dest-use-http docker://localhost:5000

ISC

/Users/jhunkins/go/src/github.com/jchunkins/oc-mirror/ImageSetConfiguration.yml

kind: ImageSetConfiguration
apiVersion: mirror.openshift.io/v1alpha2
storageConfig:
  local:
    path: /tmp/localstorage
mirror:
  operators:
    - catalog: icr.io/cpopen/ibm-zcon-zosconnect-catalog@sha256:6f02ecef46020bcd21bdd24a01f435023d5fc3943972ef0d9769d5276e178e76

ICSP

/tmp/cwdtest/oc-mirror-workspace/results-1675716807/imageContentSourcePolicy.yaml

apiVersion: operator.openshift.io/v1alpha1
kind: ImageContentSourcePolicy
metadata:
  labels:
    operators.openshift.org/catalog: "true"
  name: operator-0
spec:
  repositoryDigestMirrors:
    - mirrors:
        - localhost:5000/cpopen
      source: icr.io/cpopen
    - mirrors:
        - localhost:5000/openshift4
      source: registry.redhat.io/openshift4

CatalogSource

/tmp/cwdtest/oc-mirror-workspace/results-1675716807/catalogSource-ibm-zcon-zosconnect-catalog.yaml

apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: ibm-zcon-zosconnect-catalog
  namespace: openshift-marketplace
spec:
  image: localhost:5000/cpopen/ibm-zcon-zosconnect-catalog:6f02ec
  sourceType: grpc

Simple Flow With Target Namespace

FBC image from docker registry (putting images into a destination "namespace")

Command:

oc mirror -c /Users/jhunkins/go/src/github.com/jchunkins/oc-mirror/ImageSetConfiguration.yml --dest-skip-tls --dest-use-http docker://localhost:5000/foo

ISC

/Users/jhunkins/go/src/github.com/jchunkins/oc-mirror/ImageSetConfiguration.yml

kind: ImageSetConfiguration
apiVersion: mirror.openshift.io/v1alpha2
storageConfig:
  local:
    path: /tmp/localstorage
mirror:
  operators:
    - catalog: icr.io/cpopen/ibm-zcon-zosconnect-catalog@sha256:6f02ecef46020bcd21bdd24a01f435023d5fc3943972ef0d9769d5276e178e76

ICSP

/tmp/cwdtest/oc-mirror-workspace/results-1675716807/imageContentSourcePolicy.yaml

apiVersion: operator.openshift.io/v1alpha1
kind: ImageContentSourcePolicy
metadata:
  labels:
    operators.openshift.org/catalog: "true"
  name: operator-0
spec:
  repositoryDigestMirrors:
    - mirrors:
        - localhost:5000/foo/cpopen
      source: icr.io/cpopen
    - mirrors:
        - localhost:5000/foo/openshift4
      source: registry.redhat.io/openshift4

CatalogSource

/tmp/cwdtest/oc-mirror-workspace/results-1675716807/catalogSource-ibm-zcon-zosconnect-catalog.yaml

apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: ibm-zcon-zosconnect-catalog
  namespace: openshift-marketplace
spec:
  image: localhost:5000/foo/cpopen/ibm-zcon-zosconnect-catalog:6f02ec
  sourceType: grpc
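The ICSP mirrors in the two flows so far follow a simple pattern: the source registry host is dropped and the remaining repository path is appended to the destination given on the command line. The hypothetical `mirrorFor` helper below reproduces that pattern as inferred from the example outputs; it assumes every source starts with a registry host and is not taken from oc-mirror's source.

```go
package main

import (
	"fmt"
	"strings"
)

// mirrorFor maps a source repository namespace (e.g. "icr.io/cpopen") to its
// mirror under the command-line destination (e.g. "docker://localhost:5000/foo").
func mirrorFor(source, destination string) (string, error) {
	dest := strings.TrimSuffix(strings.TrimPrefix(destination, "docker://"), "/")
	// Drop the source registry host; keep the repository path.
	host, path, ok := strings.Cut(source, "/")
	if !ok || host == "" || path == "" {
		return "", fmt.Errorf("source %q has no registry host and path", source)
	}
	return dest + "/" + path, nil
}

func main() {
	for _, src := range []string{"icr.io/cpopen", "registry.redhat.io/openshift4"} {
		m, err := mirrorFor(src, "docker://localhost:5000/foo")
		if err != nil {
			fmt.Println(err)
			continue
		}
		fmt.Printf("%s -> %s\n", src, m)
	}
}
```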

Simple Flow With TargetCatalog / TargetTag

FBC image from docker registry (overriding the catalog name and tag)

Command:

oc mirror -c /Users/jhunkins/go/src/github.com/jchunkins/oc-mirror/ImageSetConfiguration.yml --dest-skip-tls --dest-use-http docker://localhost:5000

ISC

/Users/jhunkins/go/src/github.com/jchunkins/oc-mirror/ImageSetConfiguration.yml

kind: ImageSetConfiguration
apiVersion: mirror.openshift.io/v1alpha2
storageConfig:
  local:
    path: /tmp/localstorage
mirror:
  operators:
    - catalog: icr.io/cpopen/ibm-zcon-zosconnect-catalog@sha256:6f02ecef46020bcd21bdd24a01f435023d5fc3943972ef0d9769d5276e178e76
      # NOTE: namespace now has to be provided along with the
      # target catalog name to preserve the namespace in the resulting image
      targetCatalog: cpopen/ibm-zcon-zosconnect-example
      targetTag: v123

ICSP

/tmp/cwdtest/oc-mirror-workspace/results-1675716807/imageContentSourcePolicy.yaml

apiVersion: operator.openshift.io/v1alpha1
kind: ImageContentSourcePolicy
metadata:
  labels:
    operators.openshift.org/catalog: "true"
  name: operator-0
spec:
  repositoryDigestMirrors:
    - mirrors:
        - localhost:5000/cpopen
      source: icr.io/cpopen
    - mirrors:
        - localhost:5000/openshift4
      source: registry.redhat.io/openshift4

CatalogSource

/tmp/cwdtest/oc-mirror-workspace/results-1675716807/catalogSource-ibm-zcon-zosconnect-example.yaml

apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: ibm-zcon-zosconnect-example
  namespace: openshift-marketplace
spec:
  image: localhost:5000/cpopen/ibm-zcon-zosconnect-example:v123
  sourceType: grpc

OCI Flow

FBC image from OCI path

In this example we're suggesting the use of a targetCatalog field.

Command:

oc mirror -c /Users/jhunkins/go/src/github.com/jchunkins/oc-mirror/ImageSetConfiguration.yml --dest-skip-tls --dest-use-http --use-oci-feature docker://localhost:5000

ISC

/Users/jhunkins/go/src/github.com/jchunkins/oc-mirror/ImageSetConfiguration.yml

kind: ImageSetConfiguration
apiVersion: mirror.openshift.io/v1alpha2
storageConfig:
  local:
    path: /tmp/localstorage
mirror:
  operators:
    - catalog: oci:///foo/bar/baz/ibm-zcon-zosconnect-catalog/amd64 # This is just a path to the catalog and has no special meaning
      targetCatalog: foo/bar/baz/ibm-zcon-zosconnect-example # <--- REQUIRED when using OCI and optional for docker images
      # value is used within the context of the target registry
      # targetTag: v123 # <--- OPTIONAL

ICSP

/tmp/cwdtest/oc-mirror-workspace/results-1675716807/imageContentSourcePolicy.yaml

apiVersion: operator.openshift.io/v1alpha1
kind: ImageContentSourcePolicy
metadata:
  labels:
    operators.openshift.org/catalog: "true"
  name: operator-0
spec:
  repositoryDigestMirrors:
    - mirrors:
        - localhost:5000/cpopen
      source: icr.io/cpopen
    - mirrors:
        - localhost:5000/openshift4
      source: registry.redhat.io/openshift4

CatalogSource

/tmp/cwdtest/oc-mirror-workspace/results-1675716807/catalogSource-ibm-zcon-zosconnect-example.yaml

apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: ibm-zcon-zosconnect-example
  namespace: openshift-marketplace
spec:
  # Example uses "targetCatalog" set to "foo/bar/baz/ibm-zcon-zosconnect-example"
  # at the destination registry localhost:5000
  image: localhost:5000/foo/bar/baz/ibm-zcon-zosconnect-example:6f02ec
  sourceType: grpc

OCI Flow With Namespace

FBC image from OCI path (putting images into a destination "namespace" named "abc")

Command:

oc mirror -c /Users/jhunkins/go/src/github.com/jchunkins/oc-mirror/ImageSetConfiguration.yml --dest-skip-tls --dest-use-http --use-oci-feature docker://localhost:5000/abc

ISC

/Users/jhunkins/go/src/github.com/jchunkins/oc-mirror/ImageSetConfiguration.yml

kind: ImageSetConfiguration
apiVersion: mirror.openshift.io/v1alpha2
storageConfig:
  local:
    path: /tmp/localstorage
mirror:
  operators:
    - catalog: oci:///foo/bar/baz/ibm-zcon-zosconnect-catalog/amd64 # This is just a path to the catalog and has no special meaning
      targetCatalog: foo/bar/baz/ibm-zcon-zosconnect-example # <--- REQUIRED when using OCI and optional for docker images
      # value is used within the context of the target registry
      # targetTag: v123 # <--- OPTIONAL

ICSP

/tmp/cwdtest/oc-mirror-workspace/results-1675716807/imageContentSourcePolicy.yaml

apiVersion: operator.openshift.io/v1alpha1
kind: ImageContentSourcePolicy
metadata:
  labels:
    operators.openshift.org/catalog: "true"
  name: operator-0
spec:
  repositoryDigestMirrors:
    - mirrors:
        - localhost:5000/abc/cpopen
      source: icr.io/cpopen
    - mirrors:
        - localhost:5000/abc/openshift4
      source: registry.redhat.io/openshift4

CatalogSource

/tmp/cwdtest/oc-mirror-workspace/results-1675716807/catalogSource-ibm-zcon-zosconnect-example.yaml

apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: ibm-zcon-zosconnect-example
  namespace: openshift-marketplace
spec:
  # Example uses "targetCatalog" set to "foo/bar/baz/ibm-zcon-zosconnect-example"
  # at the destination registry localhost:5000/abc
  image: localhost:5000/abc/foo/bar/baz/ibm-zcon-zosconnect-example:6f02ec
  sourceType: grpc
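Taken together, the CatalogSource examples suggest how the image reference and name are derived. The repository comes from `targetCatalog` (or, for registry catalogs, the source path), the tag comes from `targetTag` or else the first six hex characters of the catalog digest, and the name is the final path component. The sketch below encodes that inference; `catalogSourceFor` and its parameters are hypothetical, and the six-character tag rule is read off the examples (`6f02ec`) rather than confirmed from oc-mirror.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// CatalogSourceSpec holds the two generated values compared in the examples.
type CatalogSourceSpec struct {
	Name  string // metadata.name
	Image string // spec.image
}

// catalogSourceFor derives the CatalogSource name and image.
//   destination   - command-line destination, e.g. "docker://localhost:5000/abc"
//   sourcePath    - registry catalog path without host, e.g. "cpopen/ibm-zcon-zosconnect-catalog";
//                   "" for OCI catalogs, whose on-disk path is not reused
//   targetCatalog - optional for registry catalogs, required for OCI catalogs
//   targetTag     - optional tag override
//   digest        - catalog manifest digest, e.g. "sha256:6f02ec..."
func catalogSourceFor(destination, sourcePath, targetCatalog, targetTag, digest string) (CatalogSourceSpec, error) {
	repo := targetCatalog
	if repo == "" {
		if sourcePath == "" {
			return CatalogSourceSpec{}, errors.New("targetCatalog is required for OCI catalogs")
		}
		repo = sourcePath
	}
	tag := targetTag
	if tag == "" {
		hex := strings.TrimPrefix(digest, "sha256:")
		if len(hex) < 6 {
			return CatalogSourceSpec{}, fmt.Errorf("digest %q too short to derive a tag", digest)
		}
		tag = hex[:6]
	}
	dest := strings.TrimSuffix(strings.TrimPrefix(destination, "docker://"), "/")
	name := repo[strings.LastIndex(repo, "/")+1:] // target-name portion
	return CatalogSourceSpec{Name: name, Image: dest + "/" + repo + ":" + tag}, nil
}

func main() {
	const digest = "sha256:6f02ecef46020bcd21bdd24a01f435023d5fc3943972ef0d9769d5276e178e76"
	cs, err := catalogSourceFor("docker://localhost:5000/abc", "", "foo/bar/baz/ibm-zcon-zosconnect-example", "", digest)
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(cs.Name, cs.Image)
}
```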

As IBM, I would like to use oc-mirror with the --use-oci-feature flag and ImageSetConfigs containing OCI-FBC operator catalogs to mirror these catalogs to a connected registry

so that, regarding OCI FBC catalogs:

- all bundles specified in the ImageSetConfig and their related images are mirrored from their source registry to the destination registry

- and the catalogs are mirrored from the local disk to the destination registry

- and the ImageContentSourcePolicy and CatalogSource files are generated correctly

and so that, regarding releases, additional images, and Helm charts:

- The images that are selected for mirroring are mirrored to the destination registry using the MirrorToMirror workflow

As an oc-mirror user I want a well documented and intuitive process

so that I can effectively and efficiently deliver image artifacts in both connected and disconnected installs with no impact on my current workflow

Glossary:

- OCI-FBC operator catalog: catalog image in oci format saved to disk, referenced with oci://path-to-image

- registry based operator catalog: catalog image hosted on a container registry.

References:

Acceptance criteria:

- No regression on oc-mirror use cases that are not using OCI-FBC feature

- mirrorToMirror use case with oci feature flag should be successful when all operator catalogs in ImageSetConfig are OCI-FBC:

- oc-mirror -c config.yaml docker://remote-registry --use-oci-feature succeeds

- All release images, helm charts, additional images are mirrored to the remote-registry in an incremental manner (only new images are mirrored based on contents of the storageConfig)

- All OCI-FBC catalogs, their selected bundles, and their related images are mirrored to the remote-registry, and the corresponding CatalogSource and ImageContentSourcePolicy are generated

- All registry-based catalogs, their selected bundles, and their related images are mirrored to the remote-registry, and the corresponding CatalogSource and ImageContentSourcePolicy are generated

- mirrorToDisk use case with the oci feature flag is forbidden. The following command should fail:

- oc-mirror --config=isc.yaml file://file-dir --use-oci-feature

- diskToMirror use case with the oci feature flag is forbidden. The following command should fail:

- oc-mirror --from=seq_xx_tar docker://remote-registry --use-oci-feature

WHAT

Refer to steps 2-7 of the engineering notes document https://docs.google.com/document/d/1zZ6FVtgmruAeBoUwt4t_FoZH2KEm46fPitUB23ifboY/edit#heading=h.6pw5r5w2r82

Acceptance Criteria

- Code clean-up and formatting into functions

- Ensure good commenting

- Implement correct code functionality

- Ensure the OCI mirrorToMirror functionality works correctly

- Update unit tests

Feature Overview

Customers are asking for improvements to the upgrade experience (both over-the-air and disconnected). This is a feature tracking epics required to get that work done.

Goals

- Have an option to do upgrades in more discrete steps under admin control. Specifically, these steps are:

- Control plane upgrade

- Worker nodes upgrade

- Workload enabling upgrade (i.e. Router, other components) or infra nodes

- Better visibility into any errors during the upgrades, and documentation of what the errors mean and how to recover.

- A user experience around an end-to-end backup and restore after a failed upgrade

OTA-810 - Better documentation:

- Backup procedures before upgrades.

- More control over worker upgrades (with tagged pools for user vs. admin)

- The kinds of pre-upgrade tests that are run, the errors that are flagged and what they mean and how to address them.

- Better explanation of each discrete step in upgrades, and what each CVO Operator is doing and potential errors, troubleshooting and mitigating actions.

References

OCP/Telco Definition of Done

Epic Template descriptions and documentation.


Epic Goal

- Revamp our Upgrade Documentation to include an appropriate level of detail for admins

Why is this important?

- Currently, admins have nothing that explains how upgrades actually work, and as a result they panic when things don't go perfectly

- We do not sufficiently explain, at least within the context of the upgrade docs, the differences between Degraded and Available statuses

- We do not explain order of operations

- We do not explain protections built into the platform which protect against total cluster failure, ie halting when components do not return to healthy state within exp

Scenarios

- Move out channel management to its own chapter

- Explain or link to existing documentation which addresses the differences between Degraded=True and Available=False

- Explain Upgradeable=False conditions and other aspects of the upgrade preflight strategy that Operators should use to indicate when it's unsafe to upgrade

- Explain basics of how the upgrade is applied

- CVO fetches release image

- CVO updates operators in the following order

- Each operator is expected to monitor for success

- Provide example ordering of manifests and command to extract release specific manifests and infer the ordering

- Explain how operators indicate problems and generic processes for investigating them

- Explain the special role of MCO and MCP mechanisms such as pausing pools

- Provide basic guidance on control plane upgrade duration, excluding worker pool rollout duration (90-120 minutes is normal)
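The manifest-ordering item above could be illustrated with a small helper. This is a minimal sketch, assuming the release manifests have been extracted into a directory (for example with `oc adm release extract --to=<dir> <release-image>`) and that file names follow the `0000_<run-level>_<component>_<name>.yaml` convention; `groupByRunLevel` is hypothetical, and the CVO's finer ordering rules (such as parallelism within a run level) are not modelled.

```go
package main

import (
	"fmt"
	"os"
	"regexp"
	"sort"
	"strconv"
	"strings"
)

// manifestName captures the run level and component from names such as
// "0000_50_cluster-ingress-operator_00-namespace.yaml".
var manifestName = regexp.MustCompile(`^0000_(\d{2})_([^_]+)_`)

// groupByRunLevel returns the run levels found (ascending) and, per level,
// the sorted component names. Files outside the convention are skipped.
func groupByRunLevel(names []string) ([]int, map[int][]string) {
	seen := map[int]map[string]bool{}
	for _, n := range names {
		m := manifestName.FindStringSubmatch(n)
		if m == nil {
			continue
		}
		lvl, _ := strconv.Atoi(m[1]) // two digits, always parseable
		if seen[lvl] == nil {
			seen[lvl] = map[string]bool{}
		}
		seen[lvl][m[2]] = true
	}
	levels := make([]int, 0, len(seen))
	out := make(map[int][]string, len(seen))
	for lvl, comps := range seen {
		levels = append(levels, lvl)
		for c := range comps {
			out[lvl] = append(out[lvl], c)
		}
		sort.Strings(out[lvl])
	}
	sort.Ints(levels)
	return levels, out
}

func main() {
	dir := "." // directory holding the extracted manifests
	if len(os.Args) > 1 {
		dir = os.Args[1]
	}
	entries, err := os.ReadDir(dir)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	names := make([]string, 0, len(entries))
	for _, e := range entries {
		names = append(names, e.Name())
	}
	levels, comps := groupByRunLevel(names)
	for _, l := range levels {
		fmt.Printf("%02d: %s\n", l, strings.Join(comps[l], ", "))
	}
}
```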

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- ...

Dependencies (internal and external)

- ...

Previous Work (Optional):

- There was an effort to write up how to use MachineConfig Pools to partition and optimize worker rollout in https://issues.redhat.com/browse/OTA-375

Open questions::

- …

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

The CVO README is currently aimed at CVO devs. But there are way more CVO consumers than there are CVO devs. We should aim the README at "what does the CVO do for my clusters?", and push the dev docs down under docs/dev/.

Feature Overview

- Support OpenShift to be deployed on AWS Local Zones

Goals

- Support OpenShift to be deployed from day-0 on AWS Local Zones

- Support an existing OpenShift cluster to deploy compute Nodes on AWS Local Zones (day-2)

AWS Local Zones support - feature delivered in phases:

- Phase 0 (OCPPLAN-9630): Document how to create compute nodes on AWS Local Zones in day-0 (SPLAT-635)

- Phase 1 (OCPBU-2): Create an edge compute pool to generate MachineSets for nodes with NoSchedule taints when installing a cluster in an existing VPC with AWS Local Zone subnets (SPLAT-636)

- Phase 2 (OCPBU-351): Installer automates network resource creation in Local Zones based on the edge compute pool (SPLAT-657)

Requirements

- This Section: A list of specific needs or objectives that a Feature must deliver to satisfy the Feature. Some requirements will be flagged as MVP. If an MVP gets shifted, the feature shifts. If a non-MVP requirement slips, it does not shift the feature.

| Requirement | Notes | isMvp? |
|---|---|---|
| CI - MUST be running successfully with test automation | This is a requirement for ALL features. | YES |
| Release Technical Enablement | Provide necessary release enablement details and documents. | YES |

Epic Goal

- Admins can create a compute pool named `edge` on the AWS platform to set up Local Zone MachineSets.

- Admins can select and configure subnets on Local Zones before cluster creation.

- Ensure the installer allows creating a new machine pool for `edge` workloads

- Ensure the installer can create the MachineSet with `NoSchedule` taints on edge machine pools.

- Ensure Local Zone subnets will not be used on `worker` compute pools or control planes.

- Ensure the Wavelength zone will not be used in any compute pool

- Ensure the Cluster Network MTU manifest is created when Local Zone subnets are added when installing a cluster in existing VPC

Why is this important?

- …

Scenarios

User Stories

- As a cluster admin, I want the ability to specify a set of subnets in AWS Local Zone locations to deploy worker nodes, so I can further create custom applications to deliver low latency to my end users.

- As a cluster admin, I would like to create a cluster extending worker nodes to the edge of the AWS cloud provider with Local Zones, so I can further create custom applications to deliver low latency to my end users.

- As a cluster admin, I would like to select existing subnets from the Local Zones and the parent region zones to install a cluster, so I can manage networks with my automation.

- As a cluster admin, I would like to install OpenShift clusters that extend compute nodes to the Local Zones in my day-zero operations without needing to set up the network and compute dependencies, so I can speed up edge adoption in my organization using OKD/OCP.

Acceptance Criteria

- The enhancement must be accepted and merged

- Release Technical Enablement - Provide necessary release enablement details and documents.

- The installer implementation must be merged

Dependencies (internal and external)

Previous Work (Optional):

Open questions::

- …

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

Feature Overview

- Support deploying OCP in “GCP Service Project” while networks are defined in “GCP Host Project”.

- Enable OpenShift IPI Installer to deploy OCP in “GCP Service Project” while networks are defined in “GCP Host Project”

- “GCP Service Project” is the project from which the OpenShift installer is run.

- “GCP Host Project” is the target project where the OCP machines are deployed.

- Customers use a shared VPC and have a distributed network spanning multiple projects.

Goals

- As a user, I want to be able to deploy OpenShift on Google Cloud using XPN, where networks and other resources are deployed in a shared "Host Project" while the user bootstraps the installation from a "Service Project", so that I can follow Google's architecture best practices

Requirements

- This Section: A list of specific needs or objectives that a Feature must deliver to satisfy the Feature. Some requirements will be flagged as MVP. If an MVP gets shifted, the feature shifts. If a non-MVP requirement slips, it does not shift the feature.

| Requirement | Notes | isMvp? |
|---|---|---|
| CI - MUST be running successfully with test automation | This is a requirement for ALL features. | YES |
| Release Technical Enablement | Provide necessary release enablement details and documents. | YES |

Documentation Considerations

Questions to be addressed:

- What educational or reference material (docs) is required to support this product feature? For users/admins? Other functions (security officers, etc)?

- Does this feature have doc impact?

- New Content, Updates to existing content, Release Note, or No Doc Impact

- If unsure and no Technical Writer is available, please contact Content Strategy.

- What concepts do customers need to understand to be successful in [action]?

- How do we expect customers will use the feature? For what purpose(s)?

- What reference material might a customer want/need to complete [action]?

- Is there source material that can be used as reference for the Technical Writer in writing the content? If yes, please link if available.

- What is the doc impact (New Content, Updates to existing content, or Release Note)?

Epic Goal

- Enable OpenShift IPI Installer to deploy OCP to a shared VPC in GCP.

- The host project is where the VPC and subnets are defined. Those networks are shared to one or more service projects.

- Objects created by the installer are created in the service project where possible. Firewall rules may be the only exception.

- Documentation outlines the needed minimal IAM for both the host and service project.

Why is this important?

- Shared VPCs are a GCP feature that enables granular separation of duties for organizations that centrally manage networking but delegate other functions, and that enables separation of billing. This is used more often in larger organizations where separate teams manage subsets of the cloud infrastructure. Enterprises that use this model would also like to create IPI clusters so that they can leverage the features of IPI. Currently, organizations that use Shared VPCs must use UPI and implement the features of IPI themselves. This is repetitive engineering of little value to the customer and an increased risk of drift from upstream IPI over time. As new features are built into IPI, these organizations must become aware of those changes and implement them themselves instead of getting them "for free" during upgrades.

Scenarios

- Deploy cluster(s) into service project(s) on network(s) shared from a host project.

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- ...

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

User Story:

As a developer, I want to be able to:

- specify a project for the public and private DNS managedZones

so that I can achieve

- enable DNS zones in alternate projects, such as the GCP XPN Host Project

Acceptance Criteria:

Description of criteria:

- cluster-ingress-operator can parse the project and zone name from the following format

- projects/project-id/managedZones/zoneid

- cluster-ingress-operator continues to accept names that are not relative resource names

- zoneid
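A minimal sketch of this parsing rule, assuming the two accepted shapes are exactly those listed above. `parseZoneID` is a hypothetical name rather than the cluster-ingress-operator's actual function. An empty project in the result stands for "use the project the operator already uses", which is an assumption about how the bare form would be handled.

```go
package main

import (
	"fmt"
	"strings"
)

// parseZoneID accepts either a bare managed zone name ("zoneid") or a relative
// resource name ("projects/<project-id>/managedZones/<zoneid>").
// For the bare form the returned project is empty.
func parseZoneID(id string) (project, zone string, err error) {
	parts := strings.Split(id, "/")
	switch {
	case len(parts) == 1 && parts[0] != "":
		return "", parts[0], nil
	case len(parts) == 4 && parts[0] == "projects" && parts[2] == "managedZones" &&
		parts[1] != "" && parts[3] != "":
		return parts[1], parts[3], nil
	default:
		return "", "", fmt.Errorf("unrecognized DNS zone ID %q", id)
	}
}

func main() {
	for _, id := range []string{"projects/host-project/managedZones/my-zone", "my-zone"} {
		p, z, err := parseZoneID(id)
		fmt.Printf("project=%q zone=%q err=%v\n", p, z, err)
	}
}
```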

(optional) Out of Scope:

All modifications to the openshift-installer are handled in other cards in the epic.

Engineering Details:

- https://github.com/openshift/api/blob/0ee1471bcabbfefade29abeae5aab53366d0493f/config/v1/types_dns.go#L43

- https://github.com/openshift/cluster-ingress-operator/blob/14f40c789e4e851aae2d812ea8e6fc1e70d9977f/pkg/dns/gcp/provider.go#L51-L60 oller.go#L562-L571

- https://cloud.google.com/dns/docs/reference/v1/managedZones/get#parameters

- https://cloud.google.com/apis/design/resource_names#relative_resource_name

Feature Overview

Allow users to interactively adjust the network configuration for a host after booting the agent ISO.

Goals

Configure network after host boots

The user has Static IPs, VLANs, and/or bonds to configure, but has no idea of the device names of the NICs. They don't enter any network config in agent-config.yaml. Instead they configure each host's network via the text console after it boots into the image.

Epic Goal

- Allow users to interactively adjust the network configuration for a host after booting the agent ISO, before starting processes that pull container images.

Why is this important?

- Configuring the network prior to booting a host is difficult and error-prone. Not only is the nmstate syntax fairly arcane, but the advent of 'predictable' interface names means that interfaces retain the same name across reboots but it is nearly impossible to predict what they will be. Applying configuration to the correct hosts requires correct knowledge and input of MAC addresses. All of these present opportunities for things to go wrong, and when they do the user is forced to return to the beginning of the process and generate a new ISO, then boot all of the hosts in the cluster with it again.

Scenarios

- The user has Static IPs, VLANs, and/or bonds to configure, but has no idea of the device names of the NICs. They don't enter any network config in agent-config.yaml. Instead they configure each host's network via the text console after it boots into the image.

- The user has Static IPs, VLANs, and/or bonds to configure, but makes an error entering the configuration in agent-config.yaml so that (at least) one host will not be able to pull container images from the release payload. They correct the configuration for that host via the text console before proceeding with the installation.

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- ...

Dependencies (internal and external)

- ...

Previous Work (Optional):

- …

Open questions::

- …

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

In the console service from AGENT-453, check whether we are able to pull the release image, and display this information to the user before prompting to run nmtui.

If we can access the image, then exit the service if there is no user input after some timeout, to allow the installation to proceed in the automation flow.

As a user, I need information about common misconfigurations that may be preventing the automated installation from proceeding.

If we are unable to access the release image from the registry, provide sufficient debugging information to the user to pinpoint the problem. Check for:

- DNS

- ping

- HTTP

- Registry login

- Release image

The openshift-install agent create image command will need to fetch the agent-tui executable so that it can be embedded within the agent ISO. For this reason, the agent-tui must be available in the release payload, so that it can be retrieved even when the command is invoked in a disconnected environment.

The node zero IP is currently hard-coded in set-node-zero.sh.template and in the ServiceBaseURL template string.

ServiceBaseURL is also hard-coded inside:

- apply-host-config.service.template

- create-cluster-and-infraenv-service.template

- common.sh.template

- start-agent.sh.template

- start-cluster-installation.sh.template

- assisted-service.env.template

We need to remove this hard-coding and allow the user to set the node zero IP through the TUI, with the new value reflected in the agent services and scripts.
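The following is a minimal sketch of turning the hard-coded value into a runtime parameter, using Python's string.Template. The template text and the default port 8090 are assumptions for illustration, not the contents of the actual *.template files.

```python
import ipaddress
from string import Template

# Hypothetical template; the real template files and port may differ.
SERVICE_BASE_URL = Template("http://${host}:${port}/")


def service_base_url(node_zero_ip: str, port: int = 8090) -> str:
    """Render ServiceBaseURL from a node zero IP chosen at runtime."""
    # Validate the user-supplied address; raises ValueError if malformed.
    addr = ipaddress.ip_address(node_zero_ip)
    # IPv6 literals must be wrapped in brackets inside a URL (RFC 3986).
    host = f"[{addr}]" if addr.version == 6 else str(addr)
    return SERVICE_BASE_URL.substitute(host=host, port=port)
```

Validating the address before rendering means a typo entered in the TUI is rejected up front, instead of producing a broken URL in every service that reads it.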

Currently the agent-tui always displays the additional checks (nslookup/ping/HTTP GET), even when the primary check (image pull) passes. This may confuse the user. The additional checks do not prevent the agent-tui from completing successfully; they are purely informative and exist to help troubleshoot a failure, so they are not needed when the primary check passes.

The additional checks should then be shown only when the primary check fails for any reason.

Right now all the connectivity checks are executed simultaneously, which does not seem necessary, especially in the positive scenario, i.e. when the release image can be pulled without any issue.

So the connectivity-related checks should be performed only when the release image is not accessible, to provide further information to the user.

Whether the installation is allowed to continue should therefore depend only on the result of the primary check (currently, just the image pull) and not on the secondary ones (HTTP/DNS/ping), which are purely informative.

Note: this approach will also handle the cases where, currently, the release image can be pulled but the host does not respond to ping.
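The following is a minimal sketch of the ordering described above. Checks are passed in as callables, so the same logic works with any concrete implementation. The names run_checks and Check are hypothetical.

```python
from typing import Callable, Dict, Optional, Tuple

Check = Callable[[], bool]


def run_checks(primary: Check,
               secondary: Dict[str, Check]) -> Tuple[bool, Optional[Dict[str, bool]]]:
    """Run the primary check; run the secondary checks only if it fails.

    The first element (may the installation continue?) depends only on
    the primary check; the secondary results are informative.
    """
    if primary():
        # Positive case: nothing else to run or display.
        return True, None
    # Negative case: gather troubleshooting details, in insertion order.
    details = {name: check() for name, check in secondary.items()}
    return False, details
```

With this shape, a host whose release image pull succeeds but which does not answer ping simply continues, because ping is never consulted.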

Enhance the openshift-install agent create image command so that the agent-nmtui executable is embedded in the agent ISO.

After the agent ISO has been created, agent-nmtui must be added to it using the following approach:

1. Unpack the agent ISO in a temporary folder

2. Unpack the /images/ignition.img compressed cpio archive in a temporary folder

3. Create a new ignition.img compressed cpio archive by appending the agent-nmtui

4. Create a new agent ISO with the updated ignition.img
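The following is a minimal sketch of step 3. It assumes ignition.img is consumed as an initramfs. The Linux kernel accepts an initramfs made of several concatenated, optionally compressed, cpio archives, so the existing archive can be kept as-is and a second one appended.

Assumptions in this sketch:

- The compression is gzip and the format is the SVR4 "newc" cpio format. Check the compression actually used by the ISO's ignition.img before reusing this.
- The destination path usr/local/bin/agent-nmtui is hypothetical.
- All function names are hypothetical.

```python
import gzip


def _pad4(length: int) -> bytes:
    """Zero bytes needed to reach the next 4-byte boundary."""
    return b"\0" * (-length % 4)


def _newc_entry(name: str, data: bytes, mode: int, ino: int) -> bytes:
    """Encode one entry in the SVR4 "newc" cpio format (magic 070701)."""
    name_bytes = name.encode() + b"\0"
    # ino, mode, uid, gid, nlink, mtime, filesize,
    # devmajor, devminor, rdevmajor, rdevminor, namesize, check
    fields = [ino, mode, 0, 0, 1, 0, len(data), 0, 0, 0, 0, len(name_bytes), 0]
    entry = b"070701" + b"".join(b"%08X" % f for f in fields) + name_bytes
    entry += _pad4(len(entry))        # header + name padded to 4 bytes
    entry += data + _pad4(len(data))  # file data padded to 4 bytes
    return entry


def newc_archive(path: str, data: bytes, mode: int = 0o100755) -> bytes:
    """Build a newc archive holding one file plus its parent directories."""
    parts = path.split("/")
    out = b""
    ino = 1
    for i in range(1, len(parts)):
        # Parent directories, outermost first, so extraction can create them.
        out += _newc_entry("/".join(parts[:i]), b"", 0o040755, ino)
        ino += 1
    out += _newc_entry(path, data, mode, ino)
    # Every newc archive ends with a TRAILER!!! entry.
    out += _newc_entry("TRAILER!!!", b"", 0, 0)
    return out


def append_to_ignition_img(ignition_img: bytes, nmtui: bytes,
                           dest: str = "usr/local/bin/agent-nmtui") -> bytes:
    """Return ignition.img with a second gzip-compressed cpio archive appended.

    The original archive bytes are left untouched.
    """
    return ignition_img + gzip.compress(newc_archive(dest, nmtui))
```

Appending, rather than unpacking and rewriting the existing archive, leaves the Ignition config byte-for-byte unchanged.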

Implementation note

Portions of code from the PoC at https://github.com/andfasano/gasoline could be reused.

When the openshift-install agent create image command runs, it must first extract the agent-tui executable from the release payload into a temporary folder.

When the agent-tui is shown during the initial host boot and the release image pull check fails, an additional checks box is shown along with a details text view.

The content of the details view is continuously updated with the details of the failed check, but the user cannot move the focus to the details box (using the arrow/tab keys) and thus cannot scroll its content (using the up/down arrow keys).

Create a systemd service that runs at startup prior to the login prompt and takes over the console. This should start after the network-online target, and block the login prompt appearing until it exits.

This should also block, at least temporarily, any services that require pulling an image from the registry (i.e. agent + assisted-service).
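The following is a minimal sketch of such a unit, generated by a small hypothetical Python helper. It relies on standard systemd semantics: a Type=oneshot unit ordered Before= other units delays their start until it exits. Ordering it before getty@tty1.service therefore holds back the login prompt, and ordering it before the image-pulling services holds those back too. The unit names agent.service and assisted-service.service and the ExecStart path are assumptions.

```python
from typing import Sequence


def render_console_unit(exec_start: str,
                        tty: str = "tty1",
                        blocked: Sequence[str] = ("agent.service",
                                                  "assisted-service.service")) -> str:
    """Render a hypothetical unit that owns the console before login."""
    lines = [
        "[Unit]",
        "Description=Agent interactive console (hypothetical)",
        # Wait for the network before checking the release image.
        "Wants=network-online.target",
        "After=network-online.target",
        # A oneshot unit ordered Before= these units delays them until it exits.
        "Before=getty@%s.service %s" % (tty, " ".join(blocked)),
        "",
        "[Service]",
        "Type=oneshot",
        "ExecStart=%s" % exec_start,
        # Attach the process to the console TTY so it can draw the TUI.
        "StandardInput=tty",
        "StandardOutput=tty",
        "TTYPath=/dev/%s" % tty,
        "TTYReset=yes",
        "TTYVHangup=yes",
        "",
        "[Install]",
        "WantedBy=multi-user.target",
    ]
    return "\n".join(lines) + "\n"
```

Hosts that use a serial console would also need the matching serial-getty@ instance in the Before= list.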

OCP/Telco Definition of Done

Epic Template descriptions and documentation.

Epic Goal

- The goal of this epic is to begin the process of expanding support of OpenShift on ppc64le hardware to include IPI deployments against the IBM Power Virtual Server (PowerVS) APIs.

Why is this important?

The goal of this initiative is to help boost adoption of OpenShift on ppc64le. This can be further broken down into several key objectives.

- For IBM, furthering adoption of OpenShift will continue to drive adoption of their Power hardware. In parallel, existing customers can use this to migrate their older on-premises Power workloads to a cloud environment.

- For the Multi-Arch team, this represents our first opportunity to develop an IPI offering on one of the IBM platforms. Right now, we depend on IPI on libvirt to cover our CI needs; however, this is not a supported platform for customers. PowerVS would address this caveat for ppc64le.

- By bringing in PowerVS, we can provide customers with the easiest possible experience to deploy and test workloads on IBM architectures.

- Customers already have UPI methods to address their on-premises OpenShift needs on ppc64le. This gives them a cloud-based option, furthering our hybrid-cloud story.

Scenarios

- As a user with a valid PowerVS account, I would like to provide those credentials to the OpenShift installer and get a full cluster up on IPI.

Technical Specifications

Some of the technical specifications have been laid out in MULTIARCH-75.

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

Dependencies (internal and external)

- Images are built in the RHCOS pipeline and pushed in the OVA format to the IBM Cloud.

- Installer is extended to support PowerVS as a new platform.

- Machine and cluster APIs are updated to support PowerVS.

- A terraform provider is developed against the PowerVS APIs.

- A load balancing strategy is determined and made available.

- Networking details are sorted out.

Open questions:

- Load balancing implementation?

- Networking strategy given the lack of virtual network APIs in PowerVS.

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

Epic Goal

- Improve IPI on Power VS in the 4.13 cycle

Running doc describing terminology and concepts specific to Power VS: https://docs.google.com/document/d/1Kgezv21VsixDyYcbfvxZxKNwszRK6GYKBiTTpEUubqw/edit?usp=sharing

Recently, the image registry team decided with this change[1] that major cloud platforms cannot use `emptyDir` as the storage backend. IBMCloud uses ibmcos, which PowerVS would ideally use as well. A few issues have been identified with using ibmcos as-is in the cluster image registry operator, along with some solutions, here[2]. Basically, we would need the PowerVS platform to be supported for ibmcos, plus an API change to add resourceGroup to the infra API. This only affects 4.13 and is not an issue for 4.12.

[1] https://github.com/openshift/cluster-image-registry-operator/pull/820

BU Priority Overview

As our customers create more and more clusters, it will become vital for us to help them support their fleet of clusters. Currently, our users have to use a different interface (ACM UI) to manage their fleet of clusters. Our goal is to provide our users with a single interface for everything from managing a fleet of clusters to deep diving into a single cluster. This means going to a single URL, your Hub, to interact with your OCP fleet.

Goals

The goal of this tech preview update is to improve the experience from the last round of tech preview. The following items will be improved:

- Improved Cluster Picker: Moved to Masthead for better usability, filter/search

- Support for Metrics: Metrics are now visualized from Spoke Clusters

- Avoid UI Mismatch: Dynamic Plugins from Spoke Clusters are disabled

- Console URLs Enhanced: Cluster Name Added to URL for Quick Links

- Security Improvements: Backend Proxy and Auth updates

An epic we can duplicate for each release, to ensure we have a place to catch things we ought to be doing regularly but that tend to fall by the wayside.

As a developer, I want a GitHub PR template that allows me to provide:

- functionality explanation

- assignee

- screenshots or demo

- draft test cases

Key Objective

Providing our customers with a single, simplified user experience (Hybrid Cloud Console) that is extensible, can run locally or in the cloud, and is capable of everything from managing the fleet to deep diving into a single cluster.

Why do customers want this?

- Single interface to accomplish their tasks

- Consistent UX and patterns

- Easily accessible: One URL, one set of credentials

Why do we want this?

- Shared code - improve the velocity of both teams and most importantly ensure consistency of the experience at the code level

- Pre-built PF4 components

- Accessibility & i18n

- Remove barriers for enabling ACM

Phase 2 Goal: Productization of the united Console

- Enable user to quickly change context from fleet view to single cluster view

- Add a cluster selector with an “All Clusters” option. “All Clusters” = ACM

- Shared SSO across the fleet

- Hub OCP Console can connect to remote clusters API

- When ACM is installed, the user starts from the fleet overview, aka “All Clusters”

- Share UX between views

- ACM Search —> resource list across fleet -> resource details that are consistent with single cluster details view

- Add Cluster List to OCP —> Create Cluster

As a dynamic plugin developer, I would like to render the version of my dynamic plugin in the About modal. For that, we would need to check the `LoadedDynamicPluginInfo` instances and surface their `metadata.name` and `metadata.version` in the About modal.

AC: Render name and version for each Dynamic Plugin into the About modal.
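The following is a minimal sketch of the data transformation, written in Python for illustration; the real change would live in the console frontend. It assumes each plugin info record carries a status field and, when loaded, a metadata object with name and version. This shape mirrors the description above but is an assumption, and the function name is hypothetical.

```python
from typing import Iterable, List, Mapping, Tuple


def about_modal_plugin_rows(plugins: Iterable[Mapping]) -> List[Tuple[str, str]]:
    """Return (name, version) rows for every successfully loaded plugin."""
    rows = []
    for info in plugins:
        # Only loaded plugins are assumed to carry metadata.
        if info.get("status") != "Loaded":
            continue
        metadata = info.get("metadata") or {}
        rows.append((metadata.get("name", "unknown"),
                     metadata.get("version", "unknown")))
    # Sort by name so the modal order is stable across reloads.
    return sorted(rows)
```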

Original description: When ACM moved to the unified console experience, we lost the ability in our standalone console to display our version information in our own About modal. We would like to be able to add our product and version information into the OCP About modal.

In order for hub cluster console OLM screens to behave as expected in a multicluster environment, we need to gather "copiedCSVsDisabled" flags from managed clusters so that the console backend/frontend can consume this information.

AC:

- The console operator syncs "copiedCSVsDisabled" flags from managed clusters into the hub cluster managed cluster config.
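The following is a minimal sketch of the sync step as a pure function. It assumes the flag has already been read from each managed cluster (for example, from that cluster's OLM configuration) and that the hub keeps one config entry per cluster name. Missing flags default to False, i.e. copied CSVs enabled, which is OLM's default. The data shapes and the function name are hypothetical.

```python
from typing import Dict, Mapping, Optional


def sync_copied_csvs_flags(managed_flags: Mapping[str, Optional[bool]],
                           hub_config: Mapping[str, dict]) -> Dict[str, dict]:
    """Return a new hub config with copiedCSVsDisabled set per managed cluster."""
    # Copy entries so the caller's config is not mutated.
    updated = {name: dict(entry) for name, entry in hub_config.items()}
    for cluster, disabled in managed_flags.items():
        entry = updated.setdefault(cluster, {})
        # None means the flag could not be read; fall back to OLM's default
        # (copied CSVs enabled, i.e. copiedCSVsDisabled = False).
        entry["copiedCSVsDisabled"] = bool(disabled)
    return updated
```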

Mock a multicluster environment in our CI using Cypress, without provisioning multiple clusters, by combining cy.intercept with updates to the window.SERVER flags in the before section of the test scenarios.

Acceptance Criteria:

Without provisioning additional clusters:

- mock server flags to render a cluster dropdown

- mock sample pod data for a fictional cluster

Installed operators, operator details, operand details, and operand create pages should work as expected in a multicluster environment when copied CSVs are disabled on any cluster in the fleet.

AC:

- Console backend consumes "copiedCSVsDisabled" flags for each cluster in the fleet

- Frontend handles copiedCSVsDisabled behavior "per-cluster" and OLM pages work as expected no matter which cluster is selected

Description of problem:

When viewing a resource that exists for multiple clusters, the data may be from the wrong cluster for a short time after switching clusters using the multicluster switcher.
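One common way to avoid this race is sketched below under stated assumptions:

- Tag each request with the cluster that was selected when it started, plus a generation counter that is bumped on every switch.
- Clear cached data on every switch.
- Drop any response whose tag no longer matches.

The class and method names are hypothetical and not part of the console codebase.

```python
from typing import Any, Dict, Tuple

Token = Tuple[str, int]


class ClusterScopedCache:
    """Hypothetical client-side cache that ignores responses for an old cluster."""

    def __init__(self, cluster: str) -> None:
        self.active_cluster = cluster
        self.generation = 0
        self.data: Dict[str, Any] = {}

    def switch_cluster(self, cluster: str) -> None:
        self.active_cluster = cluster
        # Bump the generation so in-flight requests become stale,
        # even if the user later switches back to the same cluster.
        self.generation += 1
        # Drop the previous cluster's data instead of rendering it.
        self.data.clear()

    def begin_request(self) -> Token:
        return (self.active_cluster, self.generation)

    def complete_request(self, token: Token, key: str, value: Any) -> bool:
        if token != (self.active_cluster, self.generation):
            # Stale response from a previous selection: discard it.
            return False
        self.data[key] = value
        return True
```

The generation counter is what handles switching A to B and back to A: a slow response from the first visit to A still carries the old generation and is discarded.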