Complete Features

These features were completed when this image was assembled

This outcome tracks the overall CoreOS Layering story as well as the technical items needed to converge CoreOS with RHEL image mode. This will provide operational consistency across the platforms.

ROADMAP for this Outcome: https://docs.google.com/document/d/1K5uwO1NWX_iS_la_fLAFJs_UtyERG32tdt-hLQM8Ow8/edit?usp=sharing

Note: phase 2 target is tech preview.

Feature Overview

In the initial delivery of CoreOS Layering, it is required that administrators provide their own build environment to customize RHCOS images. That could be a traditional RHEL environment or potentially an enterprising administrator with some knowledge of OCP Builds could set theirs up on-cluster.

The primary virtue of an on-cluster build path is to continue using the cluster to manage the cluster. No external dependency, batteries-included.

On-cluster, automated RHCOS Layering builds are important for multiple reasons:

- One-click/one-command upgrades of OCP are very popular. Many customers may want to make one or just a few customizations but also want to keep that simplified upgrade experience.

- Customers who only need to customize RHCOS temporarily (hotfix, driver test package, etc) will find off-cluster builds to be too much friction for one driver.

- One of OCP's virtues is that the platform and OS are developed, tested, and versioned together. Off-cluster building breaks that connection and leaves it up to the user to keep the OS up-to-date with the platform containers. We must make it easy for customers to add what they need and keep the OS image matched to the platform containers.

Goals & Requirements

- The goal of this feature is primarily to bring the 4.14 progress (

OCPSTRAT-35) to a Tech Preview or GA level of support. - Customers should be able to specify a Containerfile with their customizations and "forget it" as long as the automated builds succeed. If they fail, the admin should be alerted and pointed to the logs from the failed build.

- The admin should then be able to correct the build and resume the upgrade.

- Intersect with the Custom Boot Images such that a required custom software component can be present on every boot of every node throughout the installation process including the bootstrap node sequence (example: out-of-box storage driver needed for root disk).

- Users can return a pool to an unmodified image easily.

- RHEL entitlements should be wired in or at least simple to set up (once).

- Parity with current features – including the current drain/reboot suppression list, CoreOS Extensions, and config drift monitoring.

This work describes the tech preview state of On Cluster Builds. Major interfaces should be agreed upon at the end of this state.

Description of problem:

In clusters with OCB functionality enabled, sometimes the machine-os-builder pod is not restarted when we update the imageBuilderType. What we have observed is that the pod is restarted if a build is running, but it is not restarted if we are not building anything.

Version-Release number of selected component (if applicable):

$ oc get clusterversion NAME VERSION AVAILABLE PROGRESSING SINCE STATUS version 4.14.0-0.nightly-2023-09-12-195514 True False 88m Cluster version is 4.14.0-0.nightly-2023-09-12-195514

How reproducible:

Always

Steps to Reproduce:

1. Create the configuration resources needed by the OCB functionality.

To reproduce this issue we use an on-cluster-build-config configmap with an empty imageBuilderType

oc patch cm/on-cluster-build-config -n openshift-machine-config-operator -p '{"data":{"imageBuilderType": ""}}'

2. Create a infra pool and label it so that it can use OCB functionality

apiVersion: machineconfiguration.openshift.io/v1

kind: MachineConfigPool

metadata:

name: infra

spec:

machineConfigSelector:

matchExpressions:

- {key: machineconfiguration.openshift.io/role, operator: In, values: [worker,infra]}

nodeSelector:

matchLabels:

node-role.kubernetes.io/infra: ""

oc label mcp/infra machineconfiguration.openshift.io/layering-enabled=

3. Wait for the build pod to finish.

4. Once the build has finished and it has been cleaned, update the imageBuilderType so that we use "custom-pod-builder" type now.

oc patch cm/on-cluster-build-config -n openshift-machine-config-operator -p '{"data":{"imageBuilderType": "custom-pod-builder"}}'

Actual results:

We waited for one hour, but the pod is never restarted. $ oc get pods |grep build machine-os-builder-6cfbd8d5d-xk6c5 1/1 Running 0 56m $ oc logs machine-os-builder-6cfbd8d5d-xk6c5 |grep Type I0914 08:40:23.910337 1 helpers.go:330] imageBuilderType empty, defaulting to "openshift-image-builder" $ oc get cm on-cluster-build-config -o yaml |grep Type imageBuilderType: custom-pod-builder

Expected results:

When we update the imageBuilderType value, the machine-os-builder pod should be restarted.

Additional info:

Test and verify: MCO-1042: ocb-api implementation in MCO

Areas of concern:

- nodeController

- daemon

- testing suite

Done when:

MCO-1042 PR can be verified and merged.

Description of problem:

MachineConfigs that use 3.4.0 ignition with a kernelArguments are not currently allowed by MCO. In on-cluster build pools, when we create a 3.4.0 MC with kernelArguments, the pool is not degraded. No new rendered MC is created either.

Version-Release number of selected component (if applicable):

4.14.0-0.nightly-2023-09-06-065940

How reproducible:

Always

Steps to Reproduce:

1. Enable on-cluster build in the "worker" pool

2. Create a MC using 3.4.0 ignition version with kernelArguments

apiVersion: machineconfiguration.openshift.io/v1

kind: MachineConfig

metadata:

creationTimestamp: "2023-09-07T12:52:11Z"

generation: 1

labels:

machineconfiguration.openshift.io/role: worker

name: mco-tc-66376-reject-ignition-kernel-arguments-worker

resourceVersion: "175290"

uid: 10b81a5f-04ee-4d7b-a995-89f319968110

spec:

config:

ignition:

version: 3.4.0

kernelArguments:

shouldExist:

- enforcing=0

Actual results:

The build process is triggered and new image is built and deployed. The pool is never degraded.

Expected results:

MCs with igition 3.4.0 kernelArguments are not currently allowed. The MCP should be degraded reporting a message similar to this one (this is the error reported if we deploy the MC in the master pool, which is a normal pool):

oc get mcp -o yaml

....

- lastTransitionTime: "2023-09-07T12:16:55Z"

message: 'Node sregidor-s10-7pdvl-master-1.c.openshift-qe.internal is reporting:

"can''t reconcile config rendered-master-57e85ed95604e3de944b0532c58c385e with

rendered-master-24b982c8b08ab32edc2e84e3148412a3: ignition kargs section contains

changes"'

reason: 1 nodes are reporting degraded status on sync

status: "True"

type: NodeDegraded

Additional info:

When the image is deployed (it shouldn't be deployed) the kernel argument enforcing=0 is not present: sh-5.1# cat /proc/cmdline BOOT_IMAGE=(hd0,gpt3)/ostree/rhcos-05f51fadbc7fe74fa1e2ba3c0dbd0268c6996f0582c05dc064f137e93aa68184/vmlinuz-5.14.0-284.30.1.el9_2.x86_64 ostree=/ostree/boot.0/rhcos/05f51fadbc7fe74fa1e2ba3c0dbd0268c6996f0582c05dc064f137e93aa68184/0 ignition.platform.id=gcp console=tty0 console=ttyS0,115200n8 root=UUID=95083f10-c02f-4d94-a5c9-204481ce3a91 rw rootflags=prjquota boot=UUID=0440a909-3e61-4f7c-9f8e-37fe59150665 systemd.unified_cgroup_hierarchy=1 cgroup_no_v1=all psi=1

Description of problem:

When opting into on-cluster builds on both the worker and control plane MachineConfigPools, the maxUnavailable value on the MachineConfigPools is not respected when the newly built image is rolled out to all of the nodes in a given pool.

Version-Release number of selected component (if applicable):

How reproducible:

Sometimes reproducible. I'm still working on figuring out what conditions need to be present for this to occur.

Steps to Reproduce:

1. Opt an OpenShift cluster in on-cluster builds by following these instructions: https://github.com/openshift/machine-config-operator/blob/master/docs/OnClusterBuildInstructions.md

2. Ensure that both the worker and control plane MachineConfigPools are opted in.

Actual results:

Multiple nodes in both the control plane and worker MachineConfigPools are drained and cordoned simultaneously, irrespective of the maxUnavailable value. This is particularly problematic for control plane nodes since draining more than one control plane node at a time can cause etcd issues, in addition to PDBs (Pod Disruption Budgets) which can make the config change take substantially longer or block completely. I've mostly seen this issue affect control plane nodes, but I've also seen it impact both control plane and worker nodes.

Expected results:

I would have expected the new OS image to be rolled out in a similar fashion as new MachineConfigs are rolled out. In other words, a single node (or nodes up to maxUnavailable for non-control-plane nodes) is cordoned, drained, updated, and uncordoned at a time.

Additional info:

I suspect the bug may be someplace within the NodeController since that's the part of the MCO that controls which nodes update at a given time. That said, I've had difficulty reliably reproducing this issue, so finding a root cause could be more involved. This also seems to be mostly confined to the initial opt-in process. Subsequent updates seem to follow the original "rules" more closely.

The current OCB approach is a private MCO only API. making a public API would introduce the following benefits:

1. Transparent update information linked with the proposed MachineOSUpdater API

2. Follow the MCO migration to openshift/api. We should not have private APIs anymore in the MCO. Especially if the feature is publicly used.

3. Consolidate build information into one place that both the MCO and other users can pull from

the general proposal of changes here are as follows:

1. Move global build settings to ControllerConfig object or to this object. These include `finalImagePushSecret` and `finalImagePullspec`

2. create MachineOSBuild CRD which will included Dockerfile field, MachineConfig to build from etc.

3. Add these fields to MCP as well. Rather than thinking of this as two sources of truth, you can view the MCP fields as triggers to create or modify an existing MachineOSBuild object. This is similar to the mechanism that OpenShift BuildV1 uses with its BuildConfigs and Builds CRDs; the BuildConfig houses all of the necessary configs and a new Build is created with those configs. One does not need a BuildConfig to do a build, but one can use a BuildConfig to launch multiple builds.

Making these changes will enforce a system for builds rather than the appendage that the build API is currently to the MCO. The aim here is visibility rather than hidden operations.

Description of problem:

In a cluster with a pool using OCB functionality, if we update the imageBuilderType value while an openshift-image-builder pod is building an image, the build fails. It can fail in 2 ways: 1. Removing the running pod that is building the image, and what we get is a failed build reporting "Error (BuildPodDeleted)" 2. The machine-os-builder pod is restarted but the build pod is not removed. Then the build is never removed.

Version-Release number of selected component (if applicable):

$ oc get clusterversion NAME VERSION AVAILABLE PROGRESSING SINCE STATUS version 4.14.0-0.nightly-2023-09-12-195514 True False 154m Cluster version is 4.14.0-0.nightly-2023-09-12-195514

How reproducible:

Steps to Reproduce:

1. Create the needed resources to make OCB functionality work (on-cluster-build-config configmap, the secrets and the imageSpec)

We reproduced it using imageBuilderType=""

oc patch cm/on-cluster-build-config -n openshift-machine-config-operator -p '{"data":{"imageBuilderType": ""}}'

2. Create an infra pool and label it so that it can use OCB functionality

apiVersion: machineconfiguration.openshift.io/v1

kind: MachineConfigPool

metadata:

name: infra

spec:

machineConfigSelector:

matchExpressions:

- {key: machineconfiguration.openshift.io/role, operator: In, values: [worker,infra]}

nodeSelector:

matchLabels:

node-role.kubernetes.io/infra: ""

oc label mcp/infra machineconfiguration.openshift.io/layering-enabled=

3. Wait until the triggered build has finished.

4. Create a new MC to trigger a new build. This one, for example:

kind: MachineConfig

metadata:

labels:

machineconfiguration.openshift.io/role: worker

name: test-machine-config

spec:

config:

ignition:

version: 3.1.0

storage:

files:

- contents:

source: data:text/plain;charset=utf-8;base64,dGVzdA==

filesystem: root

mode: 420

path: /etc/test-file.test

5. Just after a new build pod is created, configure the on-cluster-build-config configmap to use the "custom-pod-builder" imageBuilderType

oc patch cm/on-cluster-build-config -n openshift-machine-config-operator -p '{"data":{"imageBuilderType": "custom-pod-builder"}}'

Actual results:

We have observed 2 behaviors after step 5: 1. The machine-os-builder pod is restarted and the build is never removed. build.build.openshift.io/build-rendered-infra-b2473d404d9ddfa1536d2fb32b54d855 Docker Dockerfile Running 10 seconds ago NAME READY STATUS RESTARTS AGE pod/build-rendered-infra-b2473d404d9ddfa1536d2fb32b54d855-build 1/1 Running 0 12s pod/machine-config-controller-5bdd7b66c5-dl4hh 2/2 Running 0 90m pod/machine-config-daemon-5wbw4 2/2 Running 0 90m pod/machine-config-daemon-fqr8x 2/2 Running 0 90m pod/machine-config-daemon-g77zd 2/2 Running 0 83m pod/machine-config-daemon-qzmvv 2/2 Running 0 83m pod/machine-config-daemon-w8mnz 2/2 Running 0 90m pod/machine-config-operator-7dd564556d-mqc5w 2/2 Running 0 92m pod/machine-config-server-28lnp 1/1 Running 0 89m pod/machine-config-server-5csjz 1/1 Running 0 89m pod/machine-config-server-fv4vk 1/1 Running 0 89m pod/machine-os-builder-6cfbd8d5d-2f7kd 0/1 Terminating 0 3m26s pod/machine-os-builder-6cfbd8d5d-h2ltd 0/1 ContainerCreating 0 1s NAME TYPE FROM STATUS STARTED DURATION build.build.openshift.io/build-rendered-infra-b2473d404d9ddfa1536d2fb32b54d855 Docker Dockerfile Running 12 seconds ago 2. The build pod is removed and the build fails with Error (BuildPodDeleted): NAME TYPE FROM STATUS STARTED DURATION build.build.openshift.io/build-rendered-infra-b2473d404d9ddfa1536d2fb32b54d855 Docker Dockerfile Running 10 seconds ago NAME READY STATUS RESTARTS AGE pod/build-rendered-infra-b2473d404d9ddfa1536d2fb32b54d855-build 1/1 Terminating 0 12s pod/machine-config-controller-5bdd7b66c5-dl4hh 2/2 Running 0 159m pod/machine-config-daemon-5wbw4 2/2 Running 0 159m pod/machine-config-daemon-fqr8x 2/2 Running 0 159m pod/machine-config-daemon-g77zd 2/2 Running 8 152m pod/machine-config-daemon-qzmvv 2/2 Running 16 152m pod/machine-config-daemon-w8mnz 2/2 Running 0 159m pod/machine-config-operator-7dd564556d-mqc5w 2/2 Running 0 161m pod/machine-config-server-28lnp 1/1 Running 0 159m pod/machine-config-server-5csjz 1/1 Running 0 159m pod/machine-config-server-fv4vk 1/1 Running 0 159m pod/machine-os-builder-6cfbd8d5d-g62b6 1/1 Running 0 2m11s NAME TYPE FROM STATUS STARTED DURATION build.build.openshift.io/build-rendered-infra-b2473d404d9ddfa1536d2fb32b54d855 Docker Dockerfile Running 12 seconds ago ..... NAME TYPE FROM STATUS STARTED DURATION build.build.openshift.io/build-rendered-infra-b2473d404d9ddfa1536d2fb32b54d855 Docker Dockerfile Error (BuildPodDeleted) 17 seconds ago 13s

Expected results:

Updating the imageBuilderType while a build is running should not result in the OCB functionlity in a broken status.

Additional info:

Must-gather files are provided in the first commen in this ticket.

There are a few situations in which a cluster admin might want to trigger a rebuild of their OS image in addition to situations where cluster state may dictate that we should perform a rebuild. For example, if the custom Dockerfile changes or the machine-config-osimageurl changes, it would be desirable to perform a rebuild in that case. To that end, this particular story covers adding the foundation for a rebuild mechanism in the form of an annotation that can be applied to the target MachineConfigPool. What is out of scope for this story is applying this annotation in response to a change in cluster state (e.g., custom Dockerfile change).

Done When:

- BuildController is aware of and recognizes a special annotation on layered MachineConfigPools (e.g., machineconfiguration.openshift.io/rebuildImage).

- Upon recognizing that a MachineConfigPool has this annotation, BuildController will clear any failed build attempts, delete any failed builds and their related ephemeral objects (e.g., rendered Dockerfile / MachineConfig ConfigMaps), and schedule a new build to be performed.

- This annotation should be removed when the build process completes, regardless of outcome. In other words, should the build success or fail, the annotation should be removed.

- [optional] BuildController keeps track of the number of retries for a given MachineConfigPool. This can occur via another annotation, e.g., machineconfiguration.openshift.io/buildRetries=1 . For now, this can be a hard-coded value (e.g., 5), but in the future, this could be wired up to an end-user facing knob. This annotation should be cleared upon a successful rebuild. If the rebuild is reached, then we should degrade.

Description of problem:

When a MCP has the on-cluster-build functionality enabled, when we configure a valid imageBuilderType in the on-cluster-build configmap, and later on we update this configmap with an invalid imageBuilderType the machine-config ClusterOperator is not degraded.

Version-Release number of selected component (if applicable):

$ oc get clusterversion NAME VERSION AVAILABLE PROGRESSING SINCE STATUS version 4.14.0-0.nightly-2023-09-12-195514 True False 3h56m Cluster version is 4.14.0-0.nightly-2023-09-12-195514

How reproducible:

Always

Steps to Reproduce:

1. Create a valid OCB configmap, and 2 valid secrets. Like this:

apiVersion: v1

data:

baseImagePullSecretName: mco-global-pull-secret

finalImagePullspec: quay.io/mcoqe/layering

finalImagePushSecretName: mco-test-push-secret

imageBuilderType: ""

kind: ConfigMap

metadata:

creationTimestamp: "2023-09-13T15:10:37Z"

name: on-cluster-build-config

namespace: openshift-machine-config-operator

resourceVersion: "131053"

uid: 1e0c66de-7a9a-4787-ab98-ce987a846f66

3. Label the "worker" MCP in order to enable the OCB functionality in it.

$ oc label mcp/worker machineconfiguration.openshift.io/layering-enabled=

4. Wait for the machine-os-builder pod to be created, and for the build to be finished. Just the wait for the pods, do not wait for the MCPs to be updated. As soon as the build pod has finished the build, go to step 5.

5. Patch the on-cluster-build configmap to use a valid imageBuilderType

oc patch cm/on-cluster-build-config -n openshift-machine-config-operator -p '{"data":{"imageBuilderType": "fake"}}'

Actual results:

The machine-os-builder pod crashes $ oc get pods NAME READY STATUS RESTARTS AGE machine-config-controller-5bdd7b66c5-6l7sz 2/2 Running 2 (45m ago) 63m machine-config-daemon-5ttqh 2/2 Running 0 63m machine-config-daemon-l95rj 2/2 Running 0 63m machine-config-daemon-swtc6 2/2 Running 2 57m machine-config-daemon-vq594 2/2 Running 2 57m machine-config-daemon-zrf4f 2/2 Running 0 63m machine-config-operator-7dd564556d-9smk4 2/2 Running 2 (45m ago) 65m machine-config-server-9sxjv 1/1 Running 0 62m machine-config-server-m5sdl 1/1 Running 0 62m machine-config-server-zb2hr 1/1 Running 0 62m machine-os-builder-6cfbd8d5d-t6g8w 0/1 CrashLoopBackOff 6 (3m11s ago) 9m16s But the machine-config ClusterOperator is not degraded $ oc get co machine-config NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE MESSAGE machine-config 4.14.0-0.nightly-2023-09-12-195514 True False False 63m

Expected results:

The machine-config ClusterOperator should become degraded when an invalid imageBuilderType is configured.

Additional info:

If we configure an invalid imageBuilderType directly (not by patching/editing the configmap), then the machine-config CO is degraded, but when we edit the configmap it is not. A link to the must-gather file is provided in the first comment in this issue PS: If we wait for the MCPs to be updated in step 4, the machine-os-builder pod is not restarted with the new "fake" imageBuilderType, but the machine-config CO is not degraded either, and it should. Does it make sense?

Only start the buildcontroller if the tech preview feature gate is enabled.

Proposed title of this feature request

Add support to OpenShift Telemetry to report the provider that has been added via "platform: external"

What is the nature and description of the request?

There is a new platform we have added support in OpenShift 4.14 called "external" which has been added for partners to enable and support their own integrations with OpenShift rather than making RH to develop and support this.

When deploying OpenShift using "platform: external: we don't have the ability right now to identify the provider where the platform has been deployed which is key for the product team to analyze demand and other metrics.

Why does the customer need this? (List the business requirements)

OpenShift Product Management needs this information to analyze adoption of these new platforms as well as other metrics specifically for these platforms to help us to make decisions for the product development.

List any affected packages or components.

Telemetry for OpenShift

There is some additional information in the following Slack thread --> https://redhat-internal.slack.com/archives/CEG5ZJQ1G/p1698758270895639

Feature Overview (aka. Goal Summary)

As a result of Hashicorp's license change to BSL, Red Hat OpenShift needs to remove the use of Hashicorp's Terraform from the installer – specifically for IPI deployments which currently use Terraform for setting up the infrastructure.

To avoid an increased support overhead once the license changes at the end of the year, we want to provision PowerVS infrastructure without the use of Terraform.

Requirements (aka. Acceptance Criteria):

- The PowerVS IPI Installer no longer contains or uses Terraform.

- The new provider should aim to provide the same results and have parity with the existing PowerVS Terraform provider. Specifically, we should aim for feature parity against the install config and the cluster it creates to minimize impact on existing customers' UX.

Use Cases (Optional):

Include use case diagrams, main success scenarios, alternative flow scenarios. Initial completion during Refinement status.

Questions to Answer (Optional):

Include a list of refinement / architectural questions that may need to be answered before coding can begin. Initial completion during Refinement status.

Out of Scope

High-level list of items that are out of scope. Initial completion during Refinement status.

Background

Provide any additional context is needed to frame the feature. Initial completion during Refinement status.

Customer Considerations

Provide any additional customer-specific considerations that must be made when designing and delivering the Feature. Initial completion during Refinement status.

Documentation Considerations

Provide information that needs to be considered and planned so that documentation will meet customer needs. If the feature extends existing functionality, provide a link to its current documentation. Initial completion during Refinement status.

Interoperability Considerations

Which other projects, including ROSA/OSD/ARO, and versions in our portfolio does this feature impact? What interoperability test scenarios should be factored by the layered products? Initial completion during Refinement status.

Feature Overview (aka. Goal Summary)

As a result of Hashicorp's license change to BSL, Red Hat OpenShift needs to remove the use of Hashicorp's Terraform from the installer – specifically for OpenStack deployments which currently use Terraform for setting up the infrastructure.

To avoid an increased support overhead once the license changes at the end of the year, we want to provision OpenStack infrastructure without the use of Terraform.

Requirements (aka. Acceptance Criteria):

- The OpenStack Installer no longer contains or uses Terraform.

- The new provider should aim to provide the same results and have parity with the existing OpenStack Terraform provider. Specifically, we should aim for feature parity against the install config and the cluster it creates to minimize impact on existing customers' UX.

Use Cases (Optional):

Include use case diagrams, main success scenarios, alternative flow scenarios. Initial completion during Refinement status.

Questions to Answer (Optional):

Include a list of refinement / architectural questions that may need to be answered before coding can begin. Initial completion during Refinement status.

Out of Scope

High-level list of items that are out of scope. Initial completion during Refinement status.

Background

Provide any additional context is needed to frame the feature. Initial completion during Refinement status.

Customer Considerations

Provide any additional customer-specific considerations that must be made when designing and delivering the Feature. Initial completion during Refinement status.

Documentation Considerations

Provide information that needs to be considered and planned so that documentation will meet customer needs. If the feature extends existing functionality, provide a link to its current documentation. Initial completion during Refinement status.

Interoperability Considerations

Which other projects, including ROSA/OSD/ARO, and versions in our portfolio does this feature impact? What interoperability test scenarios should be factored by the layered products? Initial completion during Refinement status.

Goal

- Create cluster and OpenStackCluster resource for the install-config.yaml

- Create OpenStackMachine

- Remove terraform dependency for OpenStack

Why is this important?

- To have a CAPO cluster functionally equivalent to the installer

Scenarios

\

- ...

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement

- ...

Dependencies (internal and external)

- ...

Previous Work (Optional):

- …

Open questions::

- …

Rebase Installer onto the development branch of cluster-api-provider-openstack to provide CI signal to the CAPO maintainers.

Right now when trying one installation with this work https://github.com/openshift/installer/pull/7939 the bootstrap machine is not getting deleted. We need to ensure it's gone once bootstrap is finalized.

Essentially: bring the upstream-master branch of shiftstack/cluster-api-provider-openstack under the github.com/openshift organisation.

We need to get CI on this PR in good shape https://github.com/openshift/installer/pull/7939 so we can look for reviews

This is needed to identify if masters are schedulable and to upload the rhos image to glance.

- Update CAPO manifests in Installer to v1beta1

- Update the vendored operator to v0.10

Feature Overview (aka. Goal Summary)

Today we expose two main APIs for HyperShift, namely `HostedCluster` and `NodePool`. We also have metrics to gauge adoption by reporting the # of hosted clusters and nodepools.

But we are still missing other metrics to be able to make correct inference about what we see in the data.

Goals (aka. expected user outcomes)

- Provide Metrics to highlight # of Nodes per NodePool or # of Nodes per cluster

- Make sure the error between what appears in CMO via `install_type` and what we report as # Hosted Clusters is minimal.

Use Cases (Optional):

- Understand product adoption

- Gauge Health of deployments

- ...

Overview

Today we have hypershift_hostedcluster_nodepools as a metric exposed to provide information on the # of nodepools used per cluster.

Additional NodePools metrics such as hypershift_nodepools_size and hypershift_nodepools_available_replicas are available but not ingested in Telemetry.

In addition to knowing how many nodepools per hosted cluster, we would like to expose the knowledge of the nodepool size.

This will help inform our decision making and provide some insights on how the product is being adopted/used.

Goals

The main goal of this epic is to show the following NodePools metrics on Telemeter, ideally as recording rules:

- Hypershift_nodepools_size

- hypershift_nodepools_available_replicas

Requirements

The implementation involves creating updates to the following GitHub repositories:

similar PRs:

https://github.com/openshift/hypershift/pull/1544

https://github.com/openshift/cluster-monitoring-operator/pull/1710

Feature Overview (aka. Goal Summary)

As a result of Hashicorp's license change to BSL, Red Hat OpenShift needs to remove the use of Hashicorp's Terraform from the installer - specifically for IPI deployments which currently use Terraform for setting up the infrastructure.

To avoid an increased support overhead once the license changes at the end of the year, we want to provision GCP infrastructure without the use of Terraform.

Requirements (aka. Acceptance Criteria):

- The GCP IPI Installer no longer contains or uses Terraform.

- The new provider should aim to provide the same results and have parity with the existing GCP Terraform provider. Specifically, we should aim for feature parity against the install config and the cluster it creates to minimize impact on existing customers' UX.

Use Cases (Optional):

Include use case diagrams, main success scenarios, alternative flow scenarios. Initial completion during Refinement status.

Questions to Answer (Optional):

Include a list of refinement / architectural questions that may need to be answered before coding can begin. Initial completion during Refinement status.

Out of Scope

High-level list of items that are out of scope. Initial completion during Refinement status.

Background

Provide any additional context is needed to frame the feature. Initial completion during Refinement status.

Customer Considerations

Provide any additional customer-specific considerations that must be made when designing and delivering the Feature. Initial completion during Refinement status.

Documentation Considerations

Provide information that needs to be considered and planned so that documentation will meet customer needs. If the feature extends existing functionality, provide a link to its current documentation. Initial completion during Refinement status.

Interoperability Considerations

Which other projects, including ROSA/OSD/ARO, and versions in our portfolio does this feature impact? What interoperability test scenarios should be factored by the layered products? Initial completion during Refinement status.

OCP/Telco Definition of Done

Epic Template descriptions and documentation.

<--- Cut-n-Paste the entire contents of this description into your new Epic --->

Epic Goal

- Provision GCP infrastructure without the use of Terraform

Why is this important?

- Removing Terraform from Installer

Scenarios

- The new provider should aim to provide the same results as the existing GCP

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- ...

Dependencies (internal and external)

- ...

Previous Work (Optional):

- …

Open questions::

- …

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

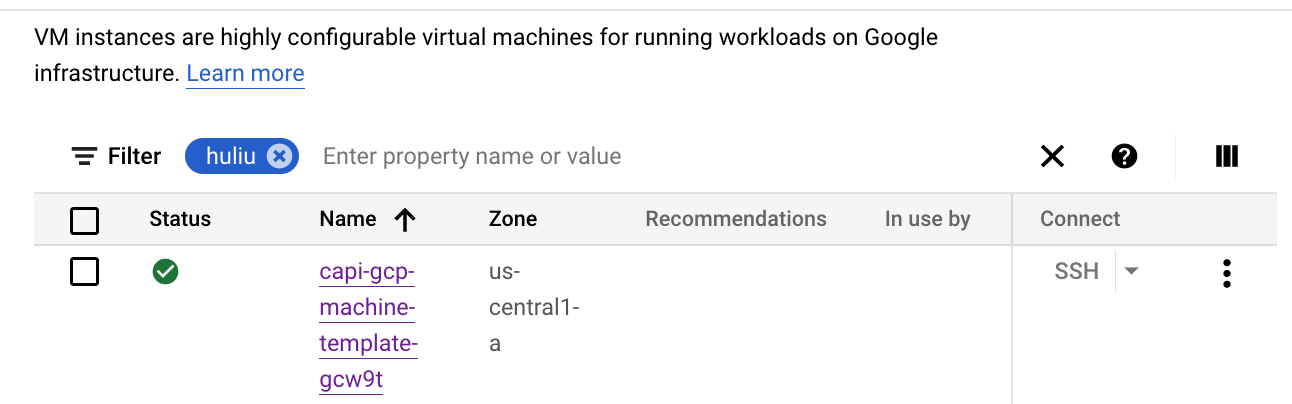

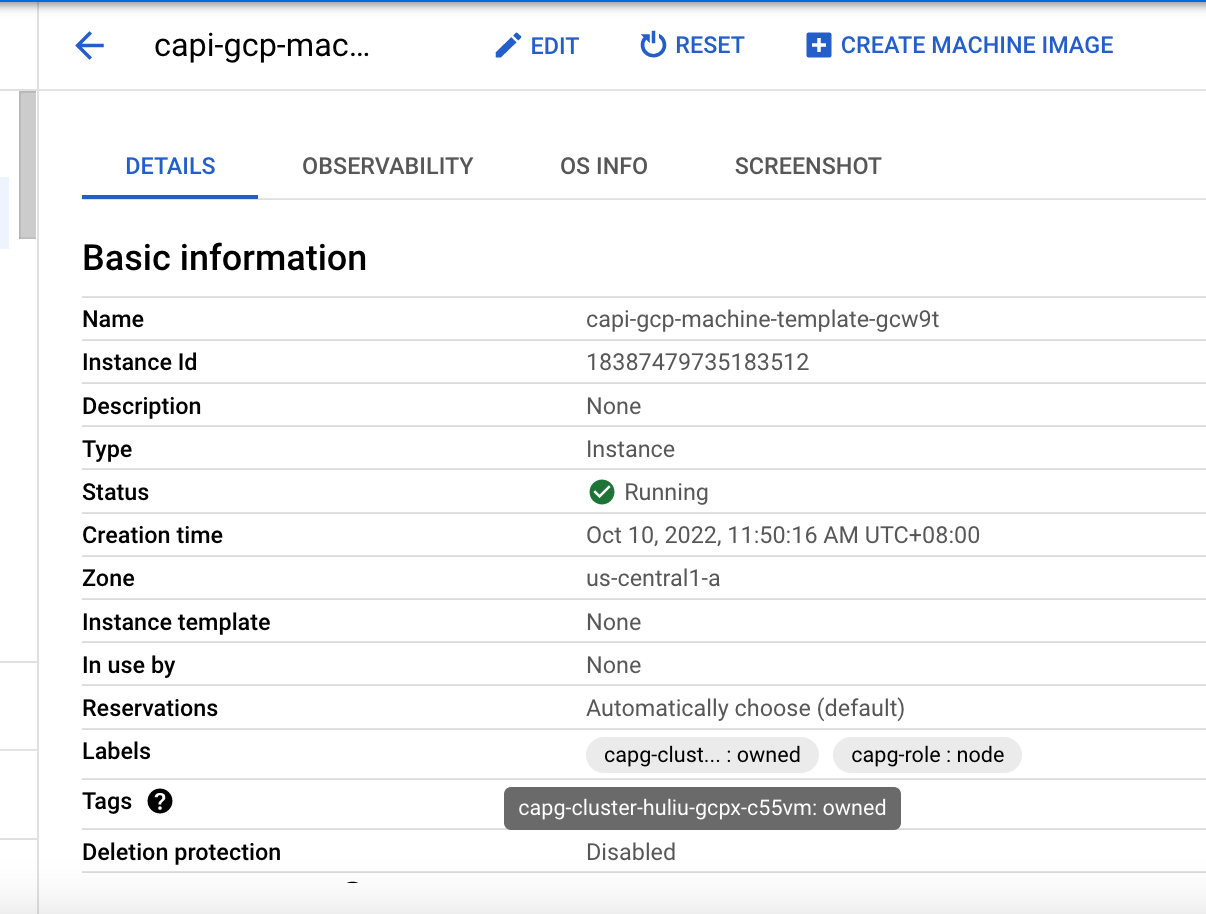

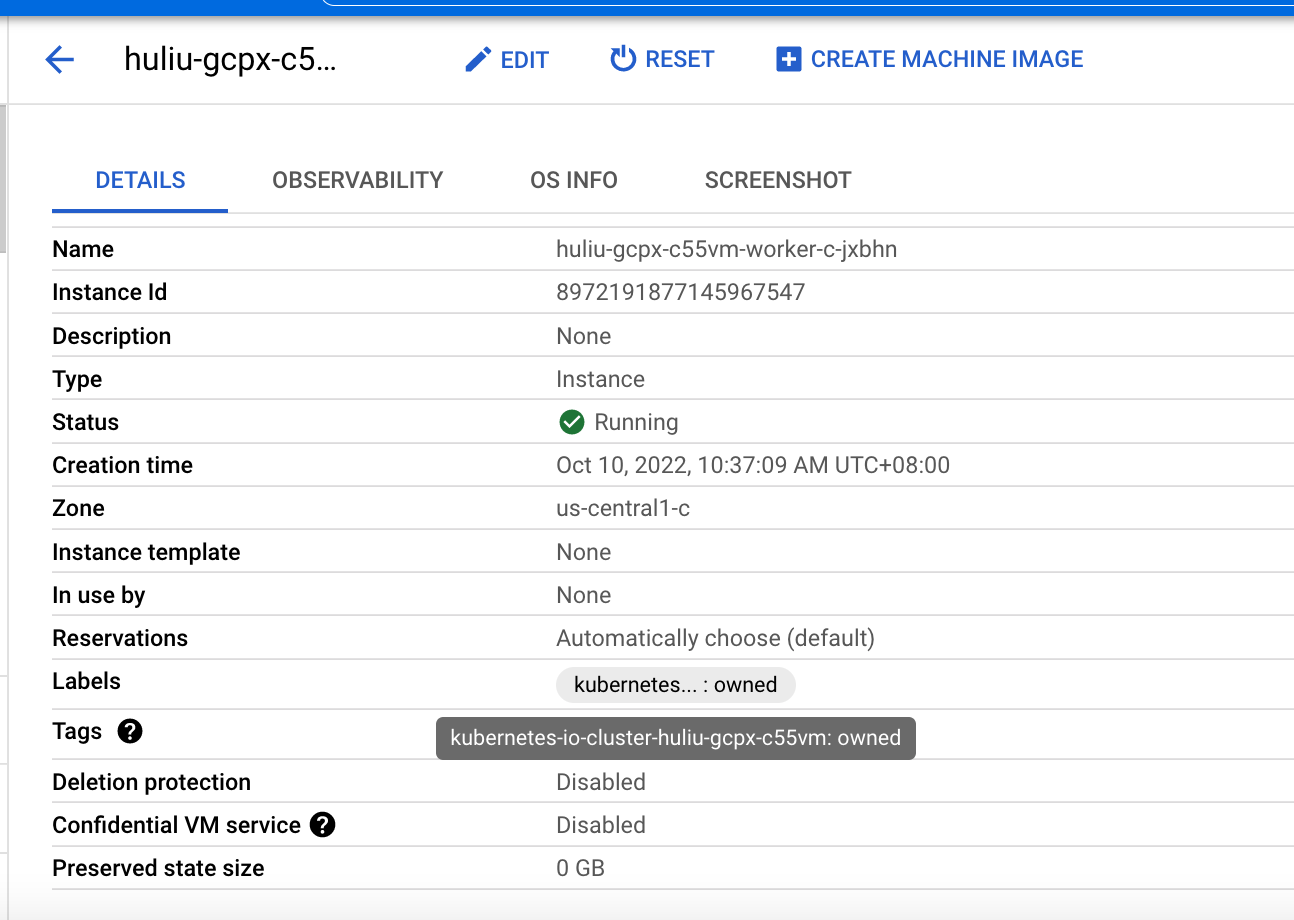

When testing GCP using the CAPG provider (not Terraform) in 4.16, it was found that the master VM instances were not distributed across instance groups but were all assigned to the same instance group.

Here is a (partial) CAPG install vs a installation completed using Terraform. The capg installation (bfournie-capg-test-5ql8j) has VMs all using us-east1-b

$ gcloud compute instances list | grep bfournie bfournie-capg-test-5ql8j-bootstrap us-east1-b n2-standard-4 10.0.0.4 34.75.212.239 RUNNING bfournie-capg-test-5ql8j-master-0 us-east1-b n2-standard-4 10.0.0.5 RUNNING bfournie-capg-test-5ql8j-master-1 us-east1-b n2-standard-4 10.0.0.6 RUNNING bfournie-capg-test-5ql8j-master-2 us-east1-b n2-standard-4 10.0.0.7 RUNNING bfournie-test-tf-pdrsw-master-0 us-east4-a n2-standard-4 10.0.0.4 RUNNING bfournie-test-tf-pdrsw-worker-a-vxjbk us-east4-a n2-standard-4 10.0.128.2 RUNNING bfournie-test-tf-pdrsw-master-1 us-east4-b n2-standard-4 10.0.0.3 RUNNING bfournie-test-tf-pdrsw-worker-b-ksxfg us-east4-b n2-standard-4 10.0.128.3 RUNNING bfournie-test-tf-pdrsw-master-2 us-east4-c n2-standard-4 10.0.0.5 RUNNING bfournie-test-tf-pdrsw-worker-c-jpzd5 us-east4-c n2-standard-4 10.0.128.4 RUNNING

User Story:

As a (user persona), I want to be able to:

- Ensure that when the cluster should use CAPI installs that the correct path is chosen. AKA: no longer use terraform for installations in GCP when the user has selected the featureSet for CAPI.

so that I can achieve

- CAPI gcp installation

Acceptance Criteria:

Description of criteria:

- Upstream documentation

- Point 1

- Point 2

- Point 3

(optional) Out of Scope:

Detail about what is specifically not being delivered in the story

Engineering Details:

- (optional) https://github/com/link.to.enhancement/

- (optional) https://issues.redhat.com/link.to.spike

- Engineering detail 1

- Engineering detail 2

![]() This requires/does not require a design proposal.

This requires/does not require a design proposal.

![]() This requires/does not require a feature gate.

This requires/does not require a feature gate.

When using the CAPG provider the ServiceAccounts created by the installer for the master and worker nodes do not have the role bindings added correctly.

For example this query shows that the SA for the master nodes has no role bindings.

$ gcloud projects get-iam-policy openshift-dev-installer --flatten="bindings[].members" --format='table(bindings.role)' --filter='bindings.members:bfournie-capg-test-lk5t5-m@openshift-dev-installer.iam.gserviceaccount.com' $

User Story:

I want to destroy the load balancers created by capg

Acceptance Criteria:

Description of criteria:

- destroy deletes all capg related resources

- backwards compatible: continues to destroy terraform clusters

- Point 2

- Point 3

(optional) Out of Scope:

Detail about what is specifically not being delivered in the story

Engineering Details:

- CAPG creates: global proxy load balancers

- our destroy code only looks for regional passthrough load balancers

- need to:

- update destroy code to not look at regional load balancers (only)

- destroy tcp proxy resource, which is not created in passthrough load balancers, so is not currently deleted

- Engineering detail 2

![]() This requires/does not require a design proposal.

This requires/does not require a design proposal.

![]() This requires/does not require a feature gate.

This requires/does not require a feature gate.

CAPG has been updated to 1.6, see https://github.com/kubernetes-sigs/cluster-api-provider-gcp/releases/tag/v1.6.0

We need to pick this up to get the latest features including disk encryption.

Machines for GCP need to be generated for use in CAPI. This will be similar to the AWS machine implementation

(https://github.com/openshift/installer/blob/master/pkg/asset/machines/aws/awsmachines.go) added in

https://github.com/openshift/installer/pull/7771

User Story:

I want to create the public and private DNS records using one of the CAPI interface SDK hooks.

Acceptance Criteria:

Description of criteria:

- Vanilla install: create public DNS record, private zone, private record

- Shared VPC: check for existing private DNS zone

- Publish Internal: create private zone, private record

- Custom DNS scenario: create no records, or zone

(optional) Out of Scope:

Detail about what is specifically not being delivered in the story

Engineering Details:

- Value for DNS record for capi-provisioned LB, can be grabbed from cluster manifest

- The value for the SDK provisioned LB will need to be handled within our hook

![]() This requires/does not require a design proposal.

This requires/does not require a design proposal.

![]() This requires/does not require a feature gate.

This requires/does not require a feature gate.

As an installer user, I want my gcp creds used for install to be used by the CAPG controller when provisioning resources.

Acceptance Criteria:

- Users can authenticate using the service account from ~/.gcp/osServiceAccount.json

- users can authenticate with default application credentials

- Docs team is updated to whether existing credential methods will continue to work (specifically environment variables): see official docs

Engineering Details:

- We can pass in a secret containing the service account creds, so we can do t hat during the manifest stage, which is probably a more appropriate place

- The controller supports auth'ing with default application credentials when there is no secret supplied, so that's good. That should work for use cases where they don't want service account

- GCP controller authentication is handled here: https://github.com/kubernetes-sigs/cluster-api-provider-gcp/blob/main/cloud/scope/managedcontrolplane.go#L67

![]() This requires/does not require a design proposal.

This requires/does not require a design proposal.

![]() This requires/does not require a feature gate.

This requires/does not require a feature gate.

When installing on GCP, I want control-plane (including bootstrap) machines to bootstrap using ignition.

I want bootstrap ignition to be secured so that sensitive data is not publicly available.

Acceptance Criteria:

Description of criteria:

- Control-plane machines pull ignition (boot successfully)

- Bootstrap ignition is not public (typically signed url)

- Service account is not required for signed url (stretch goal)

- Should be labeled (with owned and user tags)

(optional) Out of Scope:

Destroying bootstrap ignition can be handled separately.

Engineering Details:

- CAPG does not support ignition, so we will need to determine what to pass in Bootstrap to allow passing ignition stub in the user data.

- terraform: https://github.com/openshift/installer/blob/master/data/data/gcp/cluster/master/main.tf#L85

- create storage bucket

- upload bootstrap ignition to object in storage bucket

- create signed url for object

- pass signed url in ignition stub

![]() This requires/does not require a design proposal.

This requires/does not require a design proposal.

![]() This requires/does not require a feature gate.

This requires/does not require a feature gate.

https://issues.redhat.com/browse/CORS-3217 covers the upstream chagnes to CAPG needed to add disk encrytion. In addition changes will be needed in the installer to set the GCPMachine disk encryption based on the machinepool settings.

Notes on the required changes are at https://docs.google.com/document/d/1kVgqeCcPOrq4wI5YgcTZKuGJo628dchjqCrIrVDS83w/edit?usp=sharing

Once the upstream changes from CORS-3217 have been accepted:

- plumb through existing encryption key fields

- vendor updated CAPG

- update infrastructure-components.yaml (CRD definitions) if necessary

User Story:

I want to create a load balancer to provide split-horizon DNS for the cluster.

Acceptance Criteria:

Description of criteria:

- In a vanilla install, we have both ext and int load balancers

- Point 1

- Point 2

- Point 3

(optional) Out of Scope:

Detail about what is specifically not being delivered in the story

Engineering Details:

- (optional) https://issues.redhat.com/link.to.spike

- Engineering detail 1

- Engineering detail 2

![]() This requires/does not require a design proposal.

This requires/does not require a design proposal.

![]() This requires/does not require a feature gate.

This requires/does not require a feature gate.

Description of problem:

The bootstrap machine never contains a public IP address. When the publish strategy is set to External, the bootstrap machine should contain a public ip address.

Version-Release number of selected component (if applicable):

How reproducible:

always

Steps to Reproduce:

1.

2.

3.

Actual results:

Expected results:

Additional info:

Create the GCP Infrastructure controller in /pkg/clusterapi/system.go.

It will be based on the AWS controller in that file, which was added in https://github.com/openshift/installer/pull/7630.

User Story:

I want the installer to create the service accounts that would be assigned to control plane and compute machines, similar to what is done in terraform now.

Acceptance Criteria:

Description of criteria:

- Control plane and compute service accounts are created with appropriate permissions (see details)

- Service accounts are attached to machines

- Skip creation of control-plane service accounts when they are specified in the install config

(optional) Out of Scope:

Detail about what is specifically not being delivered in the story

Engineering Details:

- Service accounts are needed by the machines, so they could be created in either PreProvision or InfraReady

- compute node service account permissions are captured here: https://github.com/openshift/installer/blob/master/data/data/gcp/cluster/iam/main.tf

- control plane iam is here:

https://github.com/openshift/installer/blob/master/data/data/gcp/cluster/master/main.tf#L5-L38 - The service accounts should be specified in the machine spec.

![]() This requires/does not require a design proposal.

This requires/does not require a design proposal.

![]() This requires/does not require a feature gate.

This requires/does not require a feature gate.

When a GCP cluster is created using CAPI, upon destroy the addresses associated with the apiserver LoadBalancer are not removed. For example here are addresses left over after previous installations

$ gcloud compute addresses list --uri | grep bfournie https://www.googleapis.com/compute/v1/projects/openshift-dev-installer/global/addresses/bfournie-capg-test-27kzq-apiserver https://www.googleapis.com/compute/v1/projects/openshift-dev-installer/global/addresses/bfournie-capg-test-6jrwz-apiserver https://www.googleapis.com/compute/v1/projects/openshift-dev-installer/global/addresses/bfournie-capg-test-gn6g7-apiserver https://www.googleapis.com/compute/v1/projects/openshift-dev-installer/global/addresses/bfournie-capg-test-h96j2-apiserver https://www.googleapis.com/compute/v1/projects/openshift-dev-installer/global/addresses/bfournie-capg-test-k7fdj-apiserver https://www.googleapis.com/compute/v1/projects/openshift-dev-installer/global/addresses/bfournie-capg-test-nh4z5-apiserver https://www.googleapis.com/compute/v1/projects/openshift-dev-installer/global/addresses/bfournie-capg-test-nls2h-apiserver https://www.googleapis.com/compute/v1/projects/openshift-dev-installer/global/addresses/bfournie-capg-test-qrhmr-apiserver

Here is one of the addresses:

$ gcloud compute addresses describe https://www.googleapis.com/compute/v1/projects/openshift-dev-installer/global/addresses/bfournie-capg-test-27kzq-apiserver address: 34.107.255.76 addressType: EXTERNAL creationTimestamp: '2024-04-15T15:17:56.626-07:00' description: '' id: '2697572183218067835' ipVersion: IPV4 kind: compute#address labelFingerprint: 42WmSpB8rSM= name: bfournie-capg-test-27kzq-apiserver networkTier: PREMIUM selfLink: https://www.googleapis.com/compute/v1/projects/openshift-dev-installer/global/addresses/bfournie-capg-test-27kzq-apiserver status: RESERVED [bfournie@bfournie installer-patrick-new]$ gcloud compute addresses describe https://www.googleapis.com/compute/v1/projects/openshift-dev-installer/global/addresses/bfournie-capg-test-6jrwz-apiserver address: 34.149.208.133 addressType: EXTERNAL creationTimestamp: '2024-03-27T09:35:00.607-07:00' description: '' id: '1650865645042660443' ipVersion: IPV4 kind: compute#address labelFingerprint: 42WmSpB8rSM= name: bfournie-capg-test-6jrwz-apiserver networkTier: PREMIUM selfLink: https://www.googleapis.com/compute/v1/projects/openshift-dev-installer/global/addresses/bfournie-capg-test-6jrwz-apiserver status: RESERVED

Now that https://issues.redhat.com/browse/CORS-3447 providing the ability to override the APIServer instance group to be compatible with MAPI, we need to set the override in the installer when the Internal LoadBalancer is created.

User Story:

As a (user persona), I want to be able to:

- Create the GCP cluster manifest for CAPI installs

so that I can achieve

- The manifests in <assets-dir>/cluster-api will be applied to bootstrap ignition and the files will find their way to the machines.

Acceptance Criteria:

Description of criteria:

- Upstream documentation

- Point 1

- Point 2

- Point 3

(optional) Out of Scope:

Detail about what is specifically not being delivered in the story

Engineering Details:

- (optional) https://github/com/link.to.enhancement/

- (optional) https://issues.redhat.com/link.to.spike

- Engineering detail 1

- Engineering detail 2

![]() This requires/does not require a design proposal.

This requires/does not require a design proposal.

![]() This requires/does not require a feature gate.

This requires/does not require a feature gate.

once e2e tests are passing, include gcp capi installer in tech preview feature set.

similar to https://github.com/openshift/api/pull/1880

When GCP workers are created they are not able to pull ignition over the internal subnet as its not allowed by the firewall rules created by CAPG. The allow-<infraID>-cluster allows all TCP traffic with tags for <infraID>-node and <infraID>-control-plane but the workers that are created have tags <infraID>-worker.

We need to either add the worker tags to this firewall rule or add node tags to the worker. We should decide on a general use of CAPG firewall rules.

Feature Overview (aka. Goal Summary)

As a result of Hashicorp's license change to BSL, Red Hat OpenShift needs to remove the use of Hashicorp's Terraform from the installer – specifically for IPI deployments which currently use Terraform for setting up the infrastructure.

To avoid an increased support overhead once the license changes at the end of the year, we want to provision AWS infrastructure without the use of Terraform.

Requirements (aka. Acceptance Criteria):

- The AWS IPI Installer no longer contains or uses Terraform.

- The new provider should aim to provide the same results and have parity with the existing AWS Terraform provider. Specifically, we should aim for feature parity against the install config and the cluster it creates to minimize impact on existing customers' UX.

Use Cases (Optional):

Include use case diagrams, main success scenarios, alternative flow scenarios. Initial completion during Refinement status.

Questions to Answer (Optional):

Include a list of refinement / architectural questions that may need to be answered before coding can begin. Initial completion during Refinement status.

Out of Scope

High-level list of items that are out of scope. Initial completion during Refinement status.

Background

Provide any additional context is needed to frame the feature. Initial completion during Refinement status.

Customer Considerations

Provide any additional customer-specific considerations that must be made when designing and delivering the Feature. Initial completion during Refinement status.

Documentation Considerations

Provide information that needs to be considered and planned so that documentation will meet customer needs. If the feature extends existing functionality, provide a link to its current documentation. Initial completion during Refinement status.

Interoperability Considerations

Which other projects, including ROSA/OSD/ARO, and versions in our portfolio does this feature impact? What interoperability test scenarios should be factored by the layered products? Initial completion during Refinement status.

Epic Goal

- Provision AWS infrastructure without the use of Terraform

Why is this important?

- This is a key piece in producing a terraform-free binary for ROSA. See parent epic for more details.

Scenarios

- The new provider should aim to provide the same results as the existing AWS terraform provider.

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- ...

Dependencies (internal and external)

- ...

Previous Work (Optional):

Open questions::

- …

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

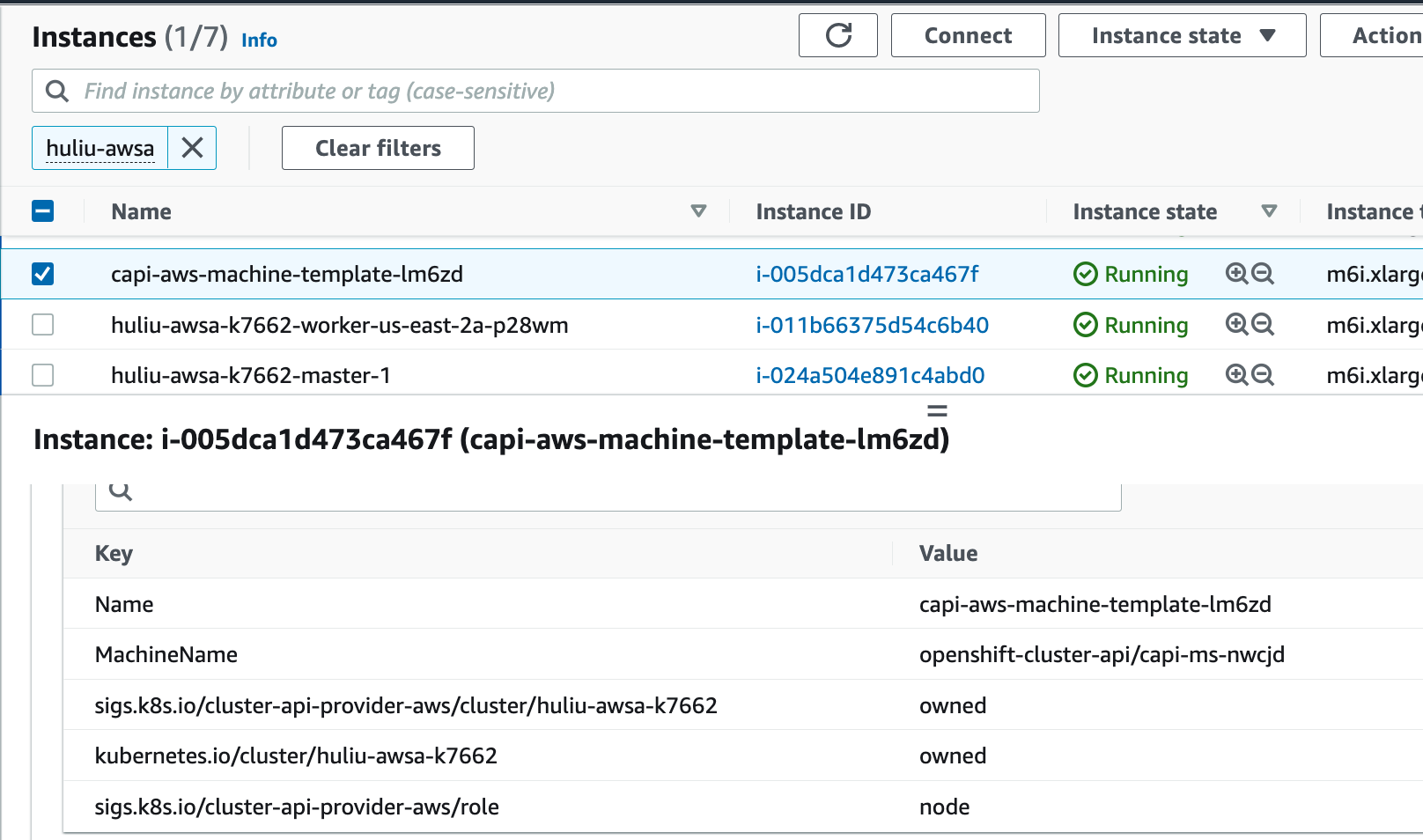

Use cases to ensure:

- When installconfig.controlPlane.platform.aws.zones is specified, control plane nodes are correctly placed in the zones.

- When installconfig.controlPlane.platform.aws.zones isn't specified, the control plane nodes are correctly balanced in the zones available in the region, preventing single-zone when possible.

User Story:

As a (user persona), I want to be able to:

- Deploy AWS cluster with IPI with minimum customizations without terraform

Acceptance Criteria:

Description of criteria:

- Install complete, e2e pass

- Production-ready

- Point 2

- Point 3

(optional) Out of Scope:

Detail about what is specifically not being delivered in the story

Engineering Details:

- PoC implementation here: https://github.com/openshift/installer/pull/7879

![]() This requires/does not require a design proposal.

This requires/does not require a design proposal.

![]() This requires/does not require a feature gate.

This requires/does not require a feature gate.

iam role is correctly attached to control plane node when installconfig.controlPlane.platform.aws.iamRole is specified

User Story:

As a (user persona), I want to be able to:

- make sure ignition respects proxy configuration

Acceptance Criteria:

Description of criteria:

- Upstream documentation

- Point 1

- Point 2

- Point 3

(optional) Out of Scope:

Detail about what is specifically not being delivered in the story

Engineering Details:

- (optional) https://github/com/link.to.enhancement/

- (optional) https://issues.redhat.com/link.to.spike

- Engineering detail 1

- Engineering detail 2

![]() This requires/does not require a design proposal.

This requires/does not require a design proposal.

![]() This requires/does not require a feature gate.

This requires/does not require a feature gate.

when installconfig.controlPlane.platform.aws.metadataService is set, the metadataservice is correctly configured for control plane machines

security group ids are added to control plane nodes when installconfig.controlPlane.platform.aws.additionalSecurityGroupIDs is specified

User Story:

As a (user persona), I want to be able to:

- implement custom endpoint support if it's still needed.

so that I can achieve

- Outcome 1

- Outcome 2

- Outcome 3

Acceptance Criteria:

Description of criteria:

- Upstream documentation

- Point 1

- Point 2

- Point 3

(optional) Out of Scope:

Detail about what is specifically not being delivered in the story

Engineering Details:

- (optional) https://github/com/link.to.enhancement/

- (optional) https://issues.redhat.com/link.to.spike

- Engineering detail 1

- Engineering detail 2

![]() This requires/does not require a design proposal.

This requires/does not require a design proposal.

![]() This requires/does not require a feature gate.

This requires/does not require a feature gate.

CAPA shows

I0312 18:00:13.602972 109 s3.go:220] "Deleting S3 object" controller="awsmachine" controllerGroup="infrastructure.cluster.x-k8s.io" controllerKind="AWSMachine" AWSMachine="openshift-cluster-api-guests/rdossant-installer-03-jjf6b-master-2" namespace="openshift-cluster-api-guests" name="rdossant-installer-03-jjf6b-master-2" reconcileID="9cda22be-5acd-4670-840f-8a6708437385" machine="openshift-cluster-api-guests/rdossant-installer-03-jjf6b-master-2" cluster="openshift-cluster-api-guests/rdossant-installer-03-jjf6b" bucket="openshift-bootstrap-data-rdossant-installer-03-jjf6b" key="control-plane/rdossant-installer-03-jjf6b-master-2" I0312 18:00:13.608919 109 s3.go:220] "Deleting S3 object" controller="awsmachine" controllerGroup="infrastructure.cluster.x-k8s.io" controllerKind="AWSMachine" AWSMachine="openshift-cluster-api-guests/rdossant-installer-03-jjf6b-master-0" namespace="openshift-cluster-api-guests" name="rdossant-installer-03-jjf6b-master-0" reconcileID="1ed0ad52-ffc1-4b62-97e4-876f8e8c3242" machine="openshift-cluster-api-guests/rdossant-installer-03-jjf6b-master-0" cluster="openshift-cluster-api-guests/rdossant-installer-03-jjf6b" bucket="openshift-bootstrap-data-rdossant-installer-03-jjf6b" key="control-plane/rdossant-installer-03-jjf6b-master-0" [...] E0312 18:04:25.282967 109 awsmachine_controller.go:576] "controllers/AWSMachine: unable to delete secrets" err=< deleting bootstrap data object: deleting S3 object: NotFound: Not Found status code: 404, request id: 9QYY3QSWKBBDZ7R8, host id: 2f3HawFbPheaptP9E+WRbu3fhEXTMwyZQ1DBPGBG7qlg74ssQR0XISM4OSlxvrn59GeFREtN4hp9C+S5LgQD2g== > E0312 18:04:25.284197 109 controller.go:329] "Reconciler error" err=< deleting bootstrap data object: deleting S3 object: NotFound: Not Found status code: 404, request id: 9QYY3QSWKBBDZ7R8, host id: 2f3HawFbPheaptP9E+WRbu3fhEXTMwyZQ1DBPGBG7qlg74ssQR0XISM4OSlxvrn59GeFREtN4hp9C+S5LgQD2g== > controller="awsmachine" controllerGroup="infrastructure.cluster.x-k8s.io" controllerKind="AWSMachine" AWSMachine="openshift-cluster-api-guests/rdossant-installer-03-jjf6b-master-0" namespace="openshift-cluster-api-guests" name="rdossant-installer-03-jjf6b-master-0" reconcileID="7fac94a1-772a-4c7b-a631-5ef7fc015d5b" E0312 18:04:25.286152 109 awsmachine_controller.go:576] "controllers/AWSMachine: unable to delete secrets" err=< deleting bootstrap data object: deleting S3 object: NotFound: Not Found status code: 404, request id: 9QYPFY0EQBM42VYH, host id: nJZakAhLrbZ1xrSNX3tyk0IKmMgFjsjMSs/D9nzci90GfRNNfUnvwZTbcaUBQYiuSlY5+aysCuwejWpvi8FmGusbQCK1Qtjr9pjqDQfxzY4= > E0312 18:04:25.287353 109 controller.go:329] "Reconciler error" err=< deleting bootstrap data object: deleting S3 object: NotFound: Not Found status code: 404, request id: 9QYPFY0EQBM42VYH, host id: nJZakAhLrbZ1xrSNX3tyk0IKmMgFjsjMSs/D9nzci90GfRNNfUnvwZTbcaUBQYiuSlY5+aysCuwejWpvi8FmGusbQCK1Qtjr9pjqDQfxzY4= > controller="awsmachine" controllerGroup="infrastructure.cluster.x-k8s.io" controllerKind="AWSMachine" AWSMachine="openshift-cluster-api-guests/rdossant-installer-03-jjf6b-master-2" namespace="openshift-cluster-api-guests" name="rdossant-installer-03-jjf6b-master-2" reconcileID="b6c792ad-5519-48d5-a994-18dda76d8a93" E0312 18:04:25.291383 109 awsmachine_controller.go:576] "controllers/AWSMachine: unable to delete secrets" err=< deleting bootstrap data object: deleting S3 object: NotFound: Not Found status code: 404, request id: 9QYGWSJDR35Q4GWX, host id: Qnltg++ia3VapXjtENZOQIwfAxbxfwVLPlC0DwcRBx+L60h52ENiNqMOkvuNwJyYnPxbo/CaawzMT11oIKGO9g== > E0312 18:04:25.292132 109 controller.go:329] "Reconciler error" err=< deleting bootstrap data object: deleting S3 object: NotFound: Not Found status code: 404, request id: 9QYGWSJDR35Q4GWX, host id: Qnltg++ia3VapXjtENZOQIwfAxbxfwVLPlC0DwcRBx+L60h52ENiNqMOkvuNwJyYnPxbo/CaawzMT11oIKGO9g== > controller="awsmachine" controllerGroup="infrastructure.cluster.x-k8s.io" controllerKind="AWSMachine" AWSMachine="openshift-cluster-api-guests/rdossant-installer-03-jjf6b-master-1" namespace="openshift-cluster-api-guests" name="rdossant-installer-03-jjf6b-master-1" reconcileID="92e1f8ed-b31f-4f75-9083-59aad15efe79" E0312 18:04:25.679859 109 awsmachine_controller.go:576] "controllers/AWSMachine: unable to delete secrets" err=< deleting bootstrap data object: deleting S3 object: NotFound: Not Found status code: 404, request id: 9QYSBZGYPC7SNJEX, host id: EplmtNQ+RxmbU88z+4App6YEVvniJpyCeMiMZuUegJIMqZgbkA1lmCjHntSLDm4eA857OdhtHsn+zD6AX7uelGIsogzN2ZziiAZXZrbIIEg= > E0312 18:04:25.680663 109 controller.go:329] "Reconciler error" err=< deleting bootstrap data object: deleting S3 object: NotFound: Not Found status code: 404, request id: 9QYSBZGYPC7SNJEX, host id: EplmtNQ+RxmbU88z+4App6YEVvniJpyCeMiMZuUegJIMqZgbkA1lmCjHntSLDm4eA857OdhtHsn+zD6AX7uelGIsogzN2ZziiAZXZrbIIEg= > controller="awsmachine" controllerGroup="infrastructure.cluster.x-k8s.io" controllerKind="AWSMachine" AWSMachine="openshift-cluster-api-guests/rdossant-installer-03-jjf6b-master-0" namespace="openshift-cluster-api-guests" name="rdossant-installer-03-jjf6b-master-0" reconcileID="9e436c67-aca0-409c-9179-0ce4cccce9ad"

Even though we are not creating s3 buckets for the master nodes. That's preventing the bootstrap process from finishing.

Because of the assumption that subnets have auto-assign public IPs turned on, which is how CAPA configures the subnets it creates, supplying your own VPC where that is not the case causes the bootstrap node to not get a public IP and therefore not be able to download the release image (no internet connection).

The bootstrap node needs a public IP because the public subnets are connected only to the internet gateway, which does not provide NAT.

User Story:

Destroy all bootstrap resources created through the new non-terraform provider.

Acceptance Criteria:

Description of criteria:

- Upstream documentation

- Point 1

- Point 2

- Point 3

(optional) Out of Scope:

Detail about what is specifically not being delivered in the story

Engineering Details:

- (optional) https://github/com/link.to.enhancement/

- (optional) https://issues.redhat.com/link.to.spike

- Engineering detail 1

- Engineering detail 2

![]() This requires/does not require a design proposal.

This requires/does not require a design proposal.

![]() This requires/does not require a feature gate.

This requires/does not require a feature gate.

Issue:

- Kubernetes API on OpenShift requires advanced health check configuration ensure graceful termiantion[1] works correctly. The health check protocol must be HTTPS testing the path /readyz.

- Currently CAPA support standard configuration (health check probe timers) and for path, when using HTTP or HTTPS is selected.

- MCS listener/target must use HTTPS health check with custom probe periods too.

Steps to reproduce:

- Create CAPA cluster

- Check the Target Groups created to route traffic to API (6443), for both Load Balancers (int and ext)

Actual results:

- TCP health check w/ default probe config

Expected results:

- HTTPS health check evaluating /readyz path

- Check parameters: 2 checks in the interval of 10s, using 10s of timeout

References:

- [1] API graceful termination: https://github.com/openshift/installer/blob/master/docs/dev/kube-apiserver-health-check.md

- documentation: https://docs.openshift.com/container-platform/4.15/installing/installing_platform_agnostic/installing-platform-agnostic.html#installation-load-balancing-user-infra_installing-platform-agnostic

- Current terraform setup: https://github.com/openshift/installer/blob/master/data/data/aws/cluster/vpc/master-elb.tf#L86-L94

Goal:

- As OCP developer I would like to deploy OpenShift using the installer/CAPA to provision the infrastructure setting the health check parameters to satisfy the existing terraform implementation

Issue:

- The API listeners is created exposing different health check attributes than the terraform implementation. There is a bug to fix to the correct path when using health check protocol HTTP or HTTPS (

CORS-3289) - The MCS listener, created using the AdditionalListeners option from the *LoadBalancer, requires advanced health check configuration to satisfy terraform implementation. Currently CAPA sets the health check with standard TCP or default path ("/") when using HTTP or HTTPS protocol.

Steps to reproduce:

- Create CAPA cluster

- Check the Target Groups created to route traffic to MCS (22623), for internal Load Balancers

Actual results:

- TCP or HTTP/S health check w/ default probe config

Expected results:

- API listeners with target health check using HTTPS evaluating /healthz path

- MCS listener with target health check using HTTPS evaluating /healthz path

- Check parameters: 2 checks in the interval of 10s, using 10s of timeout

References:

- [1] API graceful termination: https://github.com/openshift/installer/blob/master/docs/dev/kube-apiserver-health-check.md

- documentation: https://docs.openshift.com/container-platform/4.15/installing/installing_platform_agnostic/installing-platform-agnostic.html#installation-load-balancing-user-infra_installing-platform-agnostic

- Current terraform setup: https://github.com/openshift/installer/blob/master/data/data/aws/cluster/vpc/master-elb.tf#L86-L94

CAPA creates 4 security groups:

$ aws ec2 describe-security-groups --region us-east-2 --filters "Name = group-name, Values = *rdossant*" --query "SecurityGroups[*].[GroupName]" --output text rdossant-installer-03-tvcbd-lb rdossant-installer-03-tvcbd-controlplane rdossant-installer-03-tvcbd-apiserver-lb rdossant-installer-03-tvcbd-node

Given that the maximum number of SGs in a network interface is 16, we should update the max number validation in the installer:

https://github.com/openshift/installer/blob/master/pkg/types/aws/validation/machinepool.go#L66

Patrick says:

I think we want to update this to cap the user limit to 10 additional security groups:

More context: https://redhat-internal.slack.com/archives/C68TNFWA2/p1697764210634529?thread_ts=1697471429.293929&cid=C68TNFWA2

when installconfig.platform.aws.userTags is specified, all taggable resources should have the specified user tags.

- Manifests:

- cluster

- machines

- Non-capi provisioned resources:

- IAM roles

- Load balancer resources

- DNS resources (private zone is tagged)

- Check compatibility of how CAPA provider is tagging bootstrap S3 bucket

When using Wavelength zones, networks are cidr'd differently than in vanilla installs. Ensure wavelength support

Private hosted zone and cross-account shared vpc works when installconfig.platform.aws.hostedZone is specified

AWS Local zone support works as expected when local zones are specified

The schema check[1] in the LB reconciliation is hardcoded to check the primary Load Balancer only, it will result to always filter the subnets from the schema for the primary, ignoring additional Load Balancers ("SecondaryControlPlaneLoadBalancer")

How to reproduce:

- Create a cluster w/ secondaryLoadBalancer w/ internet-facing

- Check the subnets for the secondary load balancers: "ext" (public) API Load Balancer's subnets

Actual results:

- Private subnets attached to the SecondaryControlPlaneLoadBalancer

Expected results:

- Public subnets attached to the SecondaryControlPlaneLoadBalancer

References:

bootstrap ignition is not deleted when installconfig.platform.aws.preserveBootstrapIgnition is specified

As an Openshift admin i want to leverage /dev/fuse in unprivileged containers so that to successfully integrate cloud storage into OpenShift application in a secure, efficient, and scalable manner. This approach simplifies application architecture and allows developers to interact with cloud storage as if it were a local filesystem, all while maintaining strong security practices.

Epic Goal

- Give users the ability to mount /dev/fuse into a pod by default with the `io.kubernetes.cri-o.Devices` annotation

Why is this important?

- It's the first step in a series of steps that allows users to run unprivileged containers within containers

- It also gives access to faster builds within containers

Scenarios

- as a developer on openshift, I would like to run builds within containers in a performant way

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- ...

Dependencies (internal and external)

- ...

Previous Work (Optional):

- …

Open questions::

- …

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

Epic Goal

- Through this epic, we will update our CI to use a UPI workflow instead of the libvirt openshift-installer, allowing us to eliminate the use of terraform in our deployments.

Why is this important?

- There is an active initiative in openshift to remove terraform from the openshift installer.

Acceptance Criteria

- All tasks within the epic are completed.

Done Checklist

- CI - For new features (non-enablement), existing Multi-Arch CI jobs are not broken by the Epic

- All the stories, tasks, sub-tasks and bugs that belong to this epic need to have been completed and indicated by a status of 'Done'.

As a multiarch CI-focused engineer, I want to create a workflow in `openshift/release` that will enable creating the backend nodes for a cluster installation.

Customer has escalated the following issues where ports don't have TLS support. This Feature request lists all the components port raised by the customer.

Details here https://docs.google.com/document/d/1zB9vUGB83xlQnoM-ToLUEBtEGszQrC7u-hmhCnrhuXM/edit

Currently, we are serving the metrics as http on 9537 we need to upgrade to use TLS

Related to https://docs.google.com/document/d/1zB9vUGB83xlQnoM-ToLUEBtEGszQrC7u-hmhCnrhuXM/edit

Feature Overview (aka. Goal Summary)

Reduce the resource footprint of LVMS in regards to CPU, Memory, Image count and size by collapsing current various containers and deployments into a small number of highly integrated ones.

Goals (aka. expected user outcomes)

- Reduce the resource footprint while keeping the same functionallity

- Provide seemless migration for existing customers{}

Requirements (aka. Acceptance Criteria):

- Ressource Reduction:

- Reduce memory consumption

- Reduce CPU consumption (stressed, idle, requested)

- Reduce container count (to reduce APi/scheduler/crio load)

- Reduce container image sizes (to speed up deployments)

- new version must be functionally equivalent no current feature/function is dropped,

- day1 operations (installation, configuration) must be the same

- day2 operations (updates, config changes, monitoring) must be the same

- seamless migration for existing customers

- special care must be taken with MicroShift, as it uses LVMS in a special way.

Questions to Answer (Optional):

- Do we need some sort of DP/TP release, make it an opt in feature for customers to try?

Out of Scope

tbd

Background

The idea was creating during a ShiftWeek project. Potential saving / reductions are documented here: https://docs.google.com/presentation/d/1j646hJDVNefFfy1Z7glYx5sNBnSZymDjCbUQVOZJ8CE/edit#slide=id.gdbe984d017_0_0

Customer Considerations

Provide any additional customer-specific considerations that must be made when designing and delivering the Feature. Initial completion during Refinement status.

Documentation Considerations

Resource Requirements should be added/updated to the documentation in the requirements section.

Interoperability Considerations

Interoperability with MicroShift is a challenge, as it allows way more detailed configuration of topolvm with direct access to lvmd.conf

Feature Overview (aka. Goal Summary)

Enable developers to perform actions in a faster and better way than before.

Goals (aka. expected user outcomes)

Developers will be able to reduce the time or clicks spent on the UI to perform specific set of actions.

Requirements (aka. Acceptance Criteria):

Search->Resources option should show 5 of the recently searched resources across all sessions by the user.

The recently searched resources should should be clearly visible and separated from rest.

Pinning resources capability should be removed.

Getting Started menu on Add page can be collapsed and expanded

Use Cases (Optional):

Questions to Answer (Optional):

Out of Scope

Background

Customer Considerations

Documentation Considerations

Interoperability Considerations

__

Problem:

Developers have to repeatedly search for resources and pin them separately in order to view details of a resource that have seen in the past.

Goal:

Provide developers with the ability to see the last 5 resources that they have seen in the past so they can quickly view their details without any further actions.

Why is it important?

Provides a better user experience for developers when using the console.

Use cases:

- <case>

Acceptance criteria:

- Same as listed in

OCPSTRAT-1024

Dependencies (External/Internal):

Design Artifacts:

Exploration:

Note:

Description

As a user, I want the console to remember the resources I have recently searched so that I don't have to type the names of the same resources I use frequently in the Search Page.

Acceptance Criteria

- Modify the Search dropdown to add a new section to display the recently searched items in the order they were searched.

Additional Details:

Problem:

The Getting Started menu on Add page can cannot be restored after users click on the "X" symbol when its hidden.

Goal:

Users should be able to collapse and expand the Getting Started menu on Add Page.

Why is it important?

The current behavior causes confusion.

Use cases:

- <case>

Acceptance criteria:

- The Getting Started menu on Add page can be collapsed by clicking on the screen.

- The collapsed Getting Started page can be expanded by clicking on the screen.

- When collapsed, it is visibly available for someone to expand again.

Dependencies (External/Internal):

Design Artifacts:

Exploration:

Note:

Description

As of now, getting started section can be hidden and enable back using the button but user can close that button and it will not show back. It is confusing for the users. So add the expandable section instead of hide and show button similar to Functions List page

Acceptance Criteria

- Update getting Started section to use expandable section in Add page

- Update getting Started section to use expandable section in Cluster tab in overview page in Admin perspective

- Updated the test cases

Additional Details:

Check Functions list page in Dev perspective for the design

Phase 2 Deliverable:

GA support for a generic interface for administrators to define custom reboot/drain suppression rules.

Epic Goal

- Allow administrators to define which machineconfigs won't cause a drain and/or reboot.

- Allow administrators to define which ImageContentSourcePolicy/ImageTagMirrorSet/ImageDigestMirrorSet won't cause a drain and/or reboot

- Allow administrators to define alternate actions (typically restarting a system daemon) to take instead.

- Possibly (pending discussion) add switch that allows the administrator to choose to kexec "restart" instead of a full hw reset via reboot.

Why is this important?

- There is a demonstrated need from customer cluster administrators to push configuration settings and restart system services without restarting each node in the cluster.

- Customers are modifying ICSP/ITMS/IDMS outside post day 1/adding them+

- (kexec - we are not committed on this point yet) Server class hardware with various add-in cards can take 10 minutes or longer in BIOS/POST. Skipping this step would dramatically speed-up bare metal rollouts to the point that upgrades would proceed about as fast as cloud deployments. The downside is potential problems with hardware and driver support, in-flight DMA operations, and other unexpected behavior. OEMs and ODMs may or may not support their customers with this path.

Scenarios

- As a cluster admin, I want to reconfigure sudo without disrupting workloads.

- As a cluster admin, I want to update or reconfigure sshd and reload the service without disrupting workloads.

- As a cluster admin, I want to remove mirroring rules from an ICSP, ITMS, IDMS object without disrupting workloads because the scenario in which this might lead to non-pullable images at a undefined later point in time doesn't apply to me.

Acceptance Criteria

- CI - MUST be running successfully with tests automated

- Release Technical Enablement - Provide necessary release enablement details and documents.

- ...

Dependencies (internal and external)

- ...

Previous Work (Optional):

- …

Open questions::

- …

Done Checklist

- CI - CI is running, tests are automated and merged.

- Release Enablement <link to Feature Enablement Presentation>

- DEV - Upstream code and tests merged: <link to meaningful PR or GitHub Issue>

- DEV - Upstream documentation merged: <link to meaningful PR or GitHub Issue>

- DEV - Downstream build attached to advisory: <link to errata>

- QE - Test plans in Polarion: <link or reference to Polarion>

- QE - Automated tests merged: <link or reference to automated tests>

- DOC - Downstream documentation merged: <link to meaningful PR>

Follow up epic to https://issues.redhat.com/browse/MCO-507, aiming to graduate the feature from tech preview and GA'ing the functionality.

This status was added as a legacy field and isn't currently used for anything, nor should it be there. We'd like to remove this, so:

- we get rid of unused fields

- we can use the proper api conditions types for status's

Feature Overview (aka. Goal Summary)

As a result of Hashicorp's license change to BSL, Red Hat OpenShift needs to remove the use of Hashicorp's Terraform from the installer – specifically for IPI deployments which currently use Terraform for setting up the infrastructure.

To avoid an increased support overhead once the license changes at the end of the year, we want to provision OpenShift on the existing supported providers' infrastructure without the use of Terraform.

This feature will be used to track all the CAPI preparation work that is common for all the supported providers

Use Cases (Optional):

Include use case diagrams, main success scenarios, alternative flow scenarios. Initial completion during Refinement status.